What is containerization? Envision a moving truck filled with loose, unorganized items rather than neatly packed boxes. Locating specific items and unpacking would take considerable time and effort. If the truck were instead filled with labeled boxes, unloading would be far quicker.

This illustration highlights a fundamental concept: the use of containers significantly accelerates the loading and unloading process. This analogy also sheds light on the rising popularity of containerization within the DevOps framework.

By utilizing containerization, developers can construct and test applications more efficiently, eliminating the need to wait for a complete system installation. In this article, we will explore containerization in detail and examine the advantages it offers to developers.

What are Containers?

A container is a standardized unit of software that packages application code together with all of its dependencies, so that it can be moved and run across different environments. Rather than bundling a full operating system, a container shares the kernel of the host it runs on. The state of a container is captured in a lightweight image, which is self-sufficient and easily transferable and contains everything the application needs to run: code, runtime, system tools, libraries, and configuration settings. Containers behave consistently regardless of the infrastructure they are deployed on, isolating the software from its environment and ensuring uniform operation across stages such as development and staging.

What Is Containerization in DevOps?

Containerization involves packaging software code, its dependencies, and the operating system libraries it needs into a self-sufficient unit that can run on different computing systems. These isolated environments are intentionally designed to be lightweight, demanding minimal computing resources. They can function on any underlying infrastructure that provides a compatible container runtime, making them portable and capable of running consistently across various platforms.

By consolidating application code, configuration files, operating system libraries, and all necessary dependencies, containers address a prevalent challenge in software development: code that works in one environment may encounter bugs and errors when moved to another. For example, a developer might create code in a Linux setting and later find it malfunctioning when transferred to a virtual machine or a Windows system. In contrast, containers operate independently of the host infrastructure, ensuring uniform development environments.

The true advantage of containers lies in their ease of sharing. Utilizing container images—files that serve as snapshots of the container’s code, configuration, and other relevant data—allows for the rapid creation of consistent environments throughout the software development lifecycle (SDLC). This capability enables organizations to establish reproducible environments that are efficient and straightforward to manage from development to testing and into production.

Software is composed of various components, and containerization effectively consolidates an application’s essential elements into a single, well-organized package. By employing containerization, developers can bundle a program’s code, runtime engine, tools, libraries, and configurations into a portable “container.” This approach reduces the resources needed for operation and simplifies deployment in new environments.
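The idea that an image is a reproducible snapshot of code, dependencies, and configuration can be sketched in a few lines of Python. This is only a toy model: real image formats store layered filesystem archives and manifests, and the function name `image_digest`, the package version, and the `PORT` setting below are hypothetical choices made for illustration. What it shows is the property that matters for sharing: identical inputs produce the same identity, and any drift in a dependency produces a different one.

```python
import hashlib
import json


def image_digest(code: str, dependencies: dict[str, str], config: dict[str, str]) -> str:
    """Return a SHA-256 identity for a bundle of code, dependencies, and config.

    Toy model only: the bundle is serialized to canonical JSON and hashed.
    """
    bundle = {"code": code, "dependencies": dependencies, "config": config}
    canonical = json.dumps(bundle, sort_keys=True, separators=(",", ":"))
    return "sha256:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical application bundle, built twice from the same inputs.
dev = image_digest("print('hello')", {"requests": "2.31.0"}, {"PORT": "8080"})
prod = image_digest("print('hello')", {"requests": "2.31.0"}, {"PORT": "8080"})

# The same bundle with one dependency at a different version.
drifted = image_digest("print('hello')", {"requests": "2.32.0"}, {"PORT": "8080"})

print(dev == prod)     # identical inputs share one identity
print(dev == drifted)  # a changed dependency yields a different identity
```

Comparing identities like this is what lets a team confirm that the environment tested in development is the same one that reaches production.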

Real-World Example:

Consider a shipping container. It is designed to carry a range of products (your application code) and is equipped with all necessary items for transit (dependencies) – such as packing tape (configuration files) and labels (environmental variables). This container can be placed on various vessels (operating systems) and navigate through different ports (infrastructure) without impacting the contents within. Similar to how the container protects the products during their journey, containerization ensures that your application operates reliably across different environments.

Virtualization vs. containerization: What’s the difference?

Containers are often likened to virtual machines (VMs) because both technologies abstract operating systems from the underlying hardware and can serve similar purposes. However, there are key distinctions in their operational mechanisms.

Virtualization allows organizations to simultaneously run various operating systems and applications on the same infrastructure, utilizing shared computing resources. For instance, a single server can host both Windows and Linux VMs, with each VM functioning as an independent, isolated computing environment that utilizes the server’s resources.

On the other hand, containerization optimizes resource usage by packaging code, configuration files, libraries, and dependencies without including the entire operating system. Instead, it relies on a runtime engine on the host machine that allows all containers to share the same underlying OS. When deciding between containers and VMs, it is essential to consider these technical differences. For instance, long-running monolithic applications may be more appropriate for VMs due to their stability and long-term storage capabilities. Conversely, lightweight containers are ideally suited for a microservices architecture, where the overhead associated with multiple VMs would be inefficient.

Containerization:

- Containers use the host OS, meaning all containers must be compatible with that OS.

- Containers are lightweight, taking only the resources needed to run the application and the container manager.

- Container images are relatively small in size, making them easy to share.

- Containers are isolated from each other less strongly than VMs. Because they share the host kernel, a kernel vulnerability or a misconfigured container could, for example, let a process in one container reach resources used by another.

- Tools such as Kubernetes make it relatively easy to run multiple containers together, specifying how and when containers interact.

- Containers are ephemeral, meaning they stay alive only for as long as the larger system needs them. Storage is usually handled outside the container.

Virtualization:

- VMs are effectively separate computers that run their own OS. For example, a VM can run Windows even if the host OS is Ubuntu.

- VMs emulate a full computer, meaning that they replicate much of the host environment. That uses more memory, CPU cycles, and disk space.

- VM images are often much larger as they include a full OS.

- By running a separate OS, VMs running on the same hardware are more isolated from one another than containers.

- Infrastructure-as-code and configuration management tools, such as Terraform and Ansible, automate VM deployment and integration.

- VMs tend to have longer lives and include a full file system of their own.
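The resource difference in the comparison above comes down to simple arithmetic: every VM carries its own guest OS, while containers pay for the host OS once. The sketch below makes that concrete. All three overhead figures are hypothetical values chosen only so the sums are easy to follow; they are not measurements, and real footprints vary widely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    app_memory_mb: int  # memory the application itself needs


# Hypothetical overheads, assumed purely for illustration.
HOST_OS_MB = 1024   # host OS footprint, paid once in both models
GUEST_OS_MB = 1024  # guest OS footprint, paid once per VM
RUNTIME_MB = 50     # container runtime overhead, paid once per container


def vm_total_mb(workloads: list[Workload]) -> int:
    """Total memory when every workload runs in its own VM."""
    return HOST_OS_MB + sum(w.app_memory_mb + GUEST_OS_MB for w in workloads)


def container_total_mb(workloads: list[Workload]) -> int:
    """Total memory when every workload runs in its own container."""
    return HOST_OS_MB + sum(w.app_memory_mb + RUNTIME_MB for w in workloads)


services = [Workload(app_memory_mb=200) for _ in range(10)]

print(vm_total_mb(services))         # 1024 + 10 * (200 + 1024) = 13264
print(container_total_mb(services))  # 1024 + 10 * (200 + 50) = 3524
```

Under these assumed numbers the gap grows with every additional service, which is why many small services favor containers.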

The benefits of containerization in DevOps

Central to DevOps are streamlined, repeatable processes that automate the software development lifecycle. Nevertheless, contemporary applications are becoming more intricate, especially as they expand to incorporate various services. Containers play a crucial role in mitigating this complexity by enhancing standardization and repeatability, which leads to a more rapid, higher-quality, and efficient software development lifecycle.

The advantages of containerization encompass:

- Portability: Minor variations in the underlying environment can significantly affect how code executes. This is why “It works on my machine” carries so little weight and is usually said as a joke, and why “write once, run anywhere” remains a persistent aspiration for those trying to improve software development. Containers help by packaging everything an application needs into uniform, portable environments, which makes application behavior easier to standardize.

- Scalability: Containers can be deployed and configured to interact within a broader system architecture using orchestration management tools like Kubernetes. These tools also enable the automation of new containerized environment provisioning to meet real-time demand. Consequently, well-configured containerized environments can be swiftly scaled up or down with minimal to no human intervention.

- Cloud-agnostic: When designed for portability, containers can operate on any platform—be it a laptop, bare metal server, or cloud service. By abstracting the differences between underlying platforms, containers reduce the risk of vendor lock-in. They also allow for the execution of applications across various cloud platforms, enabling seamless transitions between providers.

- Integration into the DevOps pipeline: Containerization platforms are typically built to fit into broader automation workflows, making them particularly suitable for DevOps. Continuous Integration/Continuous Deployment (CI/CD) tools can automatically create and destroy containers for various tasks, including testing and production deployment.

- Efficient use of system resources: In contrast to virtual machines, containers are generally more resource-efficient and impose less overhead. They do not require a hypervisor or an additional operating system native to the container. Instead, container tools provide just enough structure to create a self-sufficient environment that optimally utilizes system resources.

- Accelerate Software Releases: Containers streamline the management of larger and more intricate applications by breaking down their codebases into smaller, collaborative run-time processes. This approach allows organizations to expedite each phase of the Software Development Life Cycle (SDLC), as it enables developers to concentrate on specific components of an application rather than the entire codebase.

- Enhanced Flexibility: Containers introduce significant flexibility into the SDLC, allowing organizations to swiftly allocate additional computing resources in response to real-time demands. They are frequently utilized to establish redundancies, thereby enhancing application reliability and ensuring higher uptime.

- Increased Application Reliability and Security: By integrating the application environment into the DevOps pipeline, containers undergo the same quality assurance processes as the rest of the application. While containers operate in conjunction, their isolated environments facilitate the identification of issues within one segment of the application without affecting the overall system.

- Effective Version Control: Container images can be versioned and stored in repositories, enabling DevOps teams to monitor changes, revert to earlier versions, and collaborate more effectively.
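The version-control benefit in the list above can be illustrated with a toy in-memory registry. This is a minimal sketch, not the API of any real registry: the class `ImageRegistry`, its methods, the repository name, and the digests are all hypothetical. It shows the two operations the text describes, tracking versions as they are pushed and reverting to the earlier one.

```python
class ImageRegistry:
    """Toy registry: repository name -> ordered list of (tag, digest) pairs."""

    def __init__(self) -> None:
        self._history: dict[str, list[tuple[str, str]]] = {}

    def push(self, repo: str, tag: str, digest: str) -> None:
        """Record a new version of an image."""
        self._history.setdefault(repo, []).append((tag, digest))

    def latest(self, repo: str) -> tuple[str, str]:
        """Return the most recently pushed version."""
        return self._history[repo][-1]

    def previous(self, repo: str) -> tuple[str, str]:
        """Return the version pushed immediately before the latest one."""
        versions = self._history[repo]
        if len(versions) < 2:
            raise LookupError(f"{repo} has no earlier version to roll back to")
        return versions[-2]


registry = ImageRegistry()
registry.push("shop/api", "1.0.0", "sha256:aaa")  # placeholder digests
registry.push("shop/api", "1.1.0", "sha256:bbb")

deployed = registry.latest("shop/api")
# Suppose 1.1.0 misbehaves in production: roll back to the prior version.
deployed = registry.previous("shop/api")
print(deployed)
```

Because earlier versions stay in the registry untouched, a rollback is a lookup rather than a rebuild.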

Disadvantages of Containerization

When developing an application, you may be contemplating the use of containerization. Before fully committing to a container-based approach, it is important to consider some of the potential drawbacks. These include:

- Security vulnerabilities. Although container isolation limits how far a compromised container can affect others, containers share the host kernel, so a containerized setup calls for additional security measures. Furthermore, debugging containers can be challenging because of their restricted visibility into the host system.

- Orchestration requirements. As noted by Veritas, managing containers typically involves utilizing several orchestration tools, whereas a single orchestrator suffices for virtual machines.

- Greater complexity. The proliferation of containers and additional layers increases the burden on developers to monitor and manage. Moreover, not every component of an application can be containerized, which adds to the overall complexity.

Best Practices for Containers and DevOps

To fully leverage the advantages of containerization within DevOps workflows, it is crucial to adhere to these best practices.

- Use immutable container images built from Dockerfiles or similar configuration files to ensure repeatability and uniformity.

- Implement automation for the building, testing, and deployment of containerized applications through CI/CD pipelines, facilitating continuous integration and delivery.

- Leverage container orchestration platforms such as Kubernetes or Docker Swarm to oversee and scale container applications. Regularly monitor performance, resource consumption, and health metrics to identify and resolve issues promptly.

- Adopt security best practices, including least-privilege access, vulnerability scanning of images, network segmentation, and encryption, to safeguard containerized applications and their underlying infrastructure.

- Optimize container resource limits, such as CPU and memory allocations, to achieve optimal performance and resource utilization. Configure auto-scaling to adjust resources based on demand.

- Develop robust backup and disaster recovery strategies for containerized data to maintain business continuity in the event of failures or outages.

- Provide training and documentation for DevOps teams on containerization principles, best practices, and tools so they can use them effectively.

What are containerization use cases?

- Cloud Migration (Lift-and-Shift): Containerization serves as an effective solution for organizations transitioning legacy applications to the cloud. By encapsulating existing applications in containers, companies can take advantage of the scalability and flexibility offered by cloud platforms without the need for significant code modifications. This “lift-and-shift” strategy facilitates a more seamless migration to the cloud while establishing a foundation for future modernization initiatives.

- Microservices Architecture: Containerization aligns seamlessly with the microservices architecture, a widely adopted method for developing contemporary cloud applications. This approach breaks down complex applications into smaller, self-sufficient services, each tasked with a specific function. Containers offer a lightweight and portable means to package these microservices, allowing for independent development, deployment, and scaling. For example, a video streaming service may utilize microservices for user authentication, content delivery, and recommendation systems. Each microservice can be containerized and deployed separately, enhancing agility and resilience.

- Continuous Integration and Continuous Delivery (CI/CD): Containerization enhances the CI/CD pipeline, a fundamental DevOps practice aimed at automating software development and deployment. By providing consistent environments throughout development, testing, and production phases, containers help eliminate discrepancies that could lead to bugs or deployment issues. Developers can create and test applications within containers that replicate the production environment, resulting in quicker release cycles and improved software quality.

- Revitalizing Legacy Applications: Legacy systems can be modernized through the use of containerization. By encapsulating these applications within containers, organizations can separate them from newer technologies, allowing them to operate alongside contemporary containerized solutions. This method supports a gradual modernization approach, enabling legacy applications to function in harmony with cloud-native innovations.

- Internet of Things (IoT): Containerization enhances the deployment and management of applications on resource-limited IoT devices. It provides a lightweight, isolated environment for running applications, making it easier to package and distribute updates as container images. This process simplifies software updates across extensive networks of devices.

- Batch Processing: Containerization is particularly effective for managing batch processing tasks. It allows complex data workflows to be divided into smaller, containerized components that can be scaled and executed on demand. This approach facilitates the efficient processing of large datasets without the need for dedicated servers for each individual task.

- Scientific Computing: Containerization provides a consistent framework for packaging and deploying scientific applications across diverse computing environments. This ensures that researchers can execute their code reliably on various platforms, promoting reproducibility of results and accelerating the pace of scientific research.

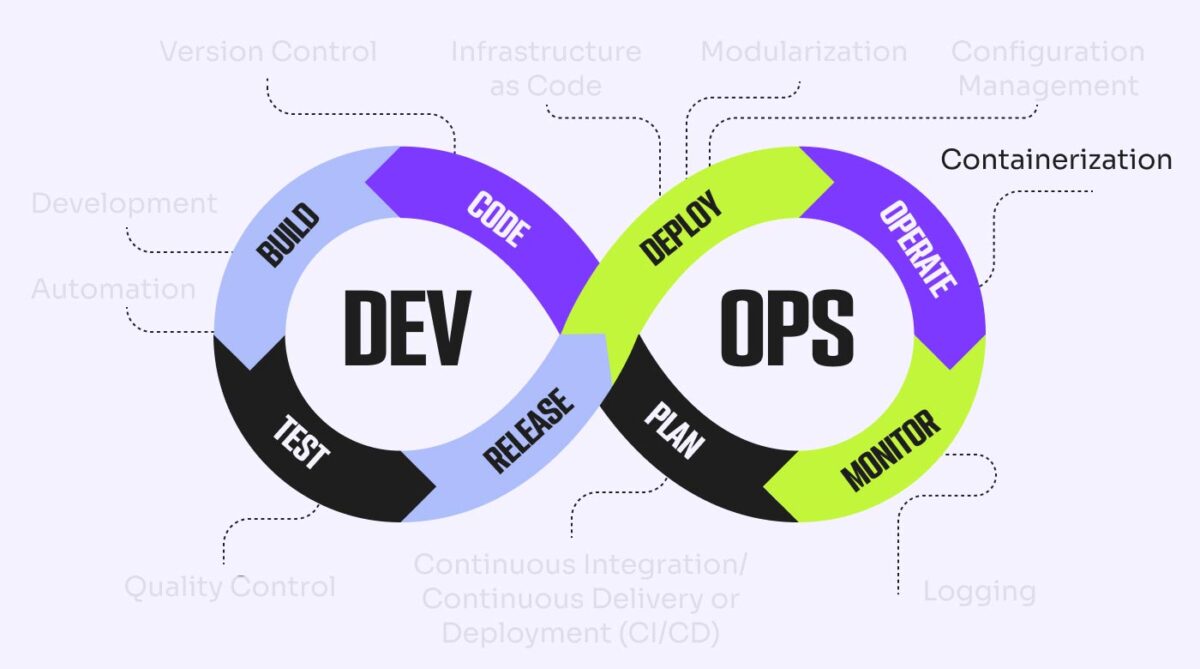

The role of containerization in DevOps

First, it is important to understand that DevOps represents a significant organizational shift that transforms how teams create and deliver value, often incorporating a software element, although it can also be applied solely to hardware. Containers offer a contemporary approach to software development, enhancing efficiency and scalability.

In short, containers are not essential for establishing a successful DevOps framework, but they can align well with your organization’s specific needs and objectives.

This alignment occurs because containers can enhance the advantages of DevOps by improving the reliability of tests, facilitating the creation of developer environments that closely resemble production settings, and streamlining the deployment process.

The role of containerization within DevOps can be categorized into several key benefits:

- Enhanced reliability: Automated and repeatable processes guarantee that tests and security assessments are conducted consistently whenever code is committed, merged, or deployed. The shift towards a culture that eliminates silos among teams fosters a collective responsibility for quality. The uniformity of containers across different environments further boosts the reliability of testing.

- Accelerated delivery: The principles of continuous improvement, microservices architecture, and an automated DevOps pipeline ensure that modifications are easier to understand, quicker to develop, simpler to test, and result in fewer unintended effects. By compartmentalizing applications into distinct containers, DevOps professionals can concentrate on specific components of the solution without worrying excessively about the potential impacts of changes in one area on others.

- Enhanced collaboration: DevOps eliminates traditional role-based teams, encouraging individuals to unite in pursuit of shared product objectives. The portability of containers facilitates collaboration, allowing team members to work within the same application environment regardless of their chosen hardware. Using a container registry (a centralized repository for container images) streamlines publishing and locating images within an organization.

Building containers into the DevOps workflow

Once a container is created, it is essential that it remains unchanged. Each deployment of a specific container version will yield consistent behavior, mirroring all previous deployments. However, changes are inevitable—so how can containers accommodate new packages that include security updates and additional features? To update a container, a new version must be constructed, and the previous version must be explicitly replaced wherever it is utilized. Even if the internal components of the new package have been modified, container maintainers strive to ensure that the container’s interaction with external systems remains consistent.

In a DevOps pipeline, this consistency ensures that tests conducted on containers within the CI/CD pipeline will perform identically to those in a production environment. This reliability enhances the testing process and minimizes the risk of code issues, bugs, and errors affecting end users.
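The update rule described above, never modify in place and always build a new version and replace the old one, can be modeled with an immutable data structure. The sketch below is an analogy in Python rather than how any container tool is implemented; the `Image` class, the `rebuild` helper, and the package names and versions are hypothetical.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Image:
    name: str
    version: str
    packages: tuple[tuple[str, str], ...]  # (package, version) pairs


def rebuild(image: Image, version: str, package: str, package_version: str) -> Image:
    """Build a new image version with one package upgraded.

    The original image object is left untouched.
    """
    upgraded = tuple(
        (name, package_version if name == package else ver)
        for name, ver in image.packages
    )
    return replace(image, version=version, packages=upgraded)


v1 = Image("shop/api", "1.0.0", (("openssl", "3.0.1"), ("flask", "3.0.0")))

# A security update arrives: build 1.0.1 instead of patching 1.0.0.
v2 = rebuild(v1, "1.0.1", "openssl", "3.0.2")

try:
    v1.version = "1.0.1"  # in-place modification is rejected
except FrozenInstanceError:
    print("images are immutable; build a new version instead")

print(v1)
print(v2)
```

Keeping `v1` intact is what makes every deployment of a given version behave like every earlier deployment of it.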

What other functions do containers serve within the DevOps workflow?

- Code: Prior to writing any code, containers introduce a degree of standardization within the development environment. By defining the necessary package versions for an application, containers ensure uniform environments across different developers’ machines. This minimizes the likelihood of bugs arising from discrepancies in the environment.

- Build: In contrast to deploying directly onto a virtual machine or physical server, where the target must be operational and prepared, a container can be created once and stored for future deployment. This separation of the build process from the target environment means that builds are only required when there are changes to the container.

- Test: Containers enhance the concept of automated testing by allowing the entire environment to be evaluated, rather than just the code itself. This leads to improved software quality, as the testing environment closely resembles the production environment.

- Release and deploy: The consistency offered by containers means that modifying code in a production setting necessitates the creation and deployment of a new container. Consequently, containers are typically transient, influencing how organizations design their applications and aligning well with a microservices architecture.

- Operate: Containers mitigate the risks associated with deploying updated code or dependencies to a live application. Changes made within one container remain isolated. For instance, two microservices operating in separate containers can utilize different versions of the same JSON encoding/decoding library without the risk of one affecting the other.

How containers work in CI/CD

A CI/CD pipeline serves as the essential mechanism that propels the DevOps workflow. For optimal performance, it is crucial for a CI/CD pipeline to strike a balance between speed and thoroughness. If the process lacks speed, it may lead to backlogs, as code commits can outpace the pipeline’s ability to process them. Conversely, if thoroughness is compromised, confidence in the CI/CD pipeline may diminish as issues arise in production.

Containerization enhances both speed and thoroughness at critical stages of the CI/CD process.

- Integration: Utilizing containers allows for a more efficient integration process, as you do not need to rebuild everything from the ground up when implementing code changes into the main codebase. You can establish a foundational container that contains the necessary application dependencies and make adjustments during the integration stage.

- Testing: Containers can be rapidly created and dismantled as needed. This eliminates the requirement for manual upkeep of specific test environments or the delays associated with configuration scripts to set up an environment. Instead, containers can be automatically provisioned and deployed at scale, resulting in quicker test execution with minimal human involvement in environment setup.

- Release: After all tests are successfully completed, the build phase of a CI/CD pipeline generates a container image, which is then stored in a container registry. With the image in place, much of the typical workload associated with the release and deployment phases is already accomplished. Orchestration tools like Kubernetes manage the deployment locations of the containers and their interactions.
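The stages above can be sketched as a small gated pipeline in which each stage runs only if the previous one succeeded, so an image reaches the registry only after tests pass. This is a simplified model, not the configuration of any real CI/CD system: the stage functions, the context keys, and the repository and commit names are hypothetical.

```python
from typing import Callable

# A stage takes the shared pipeline context and reports success or failure.
Stage = tuple[str, Callable[[dict], bool]]


def run_pipeline(stages: list[Stage], context: dict) -> list[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that passed.
    """
    passed = []
    for name, stage in stages:
        if not stage(context):
            break
        passed.append(name)
    return passed


def run_tests(ctx: dict) -> bool:
    return ctx["tests_pass"]


def build_image(ctx: dict) -> bool:
    # Tag the image with the commit it was built from.
    ctx["image"] = f"{ctx['repo']}:{ctx['commit']}"
    return True


def push_image(ctx: dict) -> bool:
    ctx["registry"].append(ctx["image"])
    return True


def deploy(ctx: dict) -> bool:
    ctx["running"] = ctx["image"]
    return True


STAGES: list[Stage] = [
    ("test", run_tests),
    ("build_image", build_image),
    ("push", push_image),
    ("deploy", deploy),
]

good = {"repo": "shop/api", "commit": "abc123", "tests_pass": True, "registry": []}
bad = {"repo": "shop/api", "commit": "def456", "tests_pass": False, "registry": []}

good_result = run_pipeline(STAGES, good)
bad_result = run_pipeline(STAGES, bad)

print(good_result, good["registry"])
print(bad_result, bad["registry"])
```

The failing run never builds or pushes anything, which is the thoroughness half of the speed-versus-thoroughness balance.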

Microservices and containerization

A microservices architecture divides an application into smaller components, each responsible for a specific function. For instance, an online banking platform may include a microservice dedicated to retrieving real-time currency exchange rates, which it shares with other microservices via an internal API. Notably, the internal processes of the microservice do not need to be exposed; only the API is made public.

For numerous organizations, the integration of DevOps, microservices, and containers is essential. The DevOps principle of continuous enhancement aligns well with the targeted nature of microservices. Additionally, microservices are often designed to be stateless, meaning they do not retain data internally and instead depend on dedicated data services. This characteristic complements the ephemeral nature of containers, which can be easily deployed or removed without concerns about data persistence. In a microservices framework, there is a direct correlation between each microservice instance and a container. As demand increases, orchestration tools can be set up to launch additional containers for a specific microservice and decommission them when demand decreases.
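Demand-based scaling of a microservice reduces to a ratio calculation. The sketch below follows the ratio-based formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), but it is a simplification: it omits the tolerance band and stabilization behavior that a real autoscaler applies, and the function name and the default bounds are assumptions made for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return how many container replicas to run for the observed load.

    current_metric and target_metric are per-replica averages, for example
    CPU utilization in percent.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, 90.0, 60.0))   # load above target: scale out to 6
print(desired_replicas(4, 15.0, 60.0))   # load well below target: scale in to 1
print(desired_replicas(4, 300.0, 60.0))  # spike: capped at max_replicas, 10
```

Because stateless microservices keep no data inside the container, replicas added or removed this way need no data migration.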

Common containerization tools

To begin working with containers, it is essential to familiarize yourself with the various tools available in the container ecosystem. These tools can be classified into two main categories:

- Container platforms: These tools are responsible for building container images and running containers on a host operating system. Notable examples include Docker and LXD.

- Container orchestration: These tools facilitate the deployment, scaling, and management of containers that collaborate to support an application. Kubernetes is a widely used platform for container orchestration.

Now, let’s explore each category in greater detail.

Container platforms

The container platform comprises a collection of tools designed for the creation, execution, and distribution of containers. Docker is the most recognized among these, offering a comprehensive platform for container management. Additionally, the emergence of various open standards has led to alternative options, allowing users to select different tools tailored to specific stages of the process. For instance, Podman provides an alternative method for running containers, while Kraken serves as an open-source registry for container distribution. Regardless of whether you opt for a unified solution or a combination of various tools, you will need tooling to:

- Process container manifests: These configuration files detail the contents of the container, the required ports, and the necessary resources.

- Build images: Produce the static, ready-to-deploy artifacts from which containers are started.

- Store and distribute images: Commonly referred to as a container registry, this serves as a central repository that can integrate with your CI/CD system for automation. It is also accessible for manual use by DevOps professionals.

- Run images: Establish and execute an isolated environment for the container. This process is relatively straightforward on Linux, while on Windows and macOS, running Linux containers typically requires a virtual machine that provides a Linux environment.
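The first step in the list above, processing a manifest that names the container's contents, ports, and resources, can be sketched as a small validation routine. The `Manifest` structure and its fields are a hypothetical format invented for this example; real tools use their own file formats, such as Dockerfiles, with different fields and rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    """Hypothetical manifest: what goes in the container and what it needs."""

    base_image: str
    packages: tuple[str, ...]
    ports: tuple[int, ...]
    memory_mb: int


def validate(manifest: Manifest) -> list[str]:
    """Return a list of problems; an empty list means the manifest is usable."""
    problems = []
    if not manifest.base_image:
        problems.append("base_image is required")
    for port in manifest.ports:
        if not 1 <= port <= 65535:
            problems.append(f"port {port} is outside 1-65535")
    if manifest.memory_mb <= 0:
        problems.append("memory_mb must be positive")
    return problems


ok = Manifest("python:3.12-slim", ("flask",), (8080,), 256)
broken = Manifest("", ("flask",), (8080, 70000), 0)

print(validate(ok))
print(validate(broken))
```

Catching mistakes at this stage is cheaper than discovering them when the image is built or run.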

Container orchestration

Larger microservices architectures can encompass thousands of microservices, each operating within one or more containers. The deployment, scaling, and management of interactions among such a vast number of containers cannot be handled manually. Instead, DevOps professionals establish parameters—such as the resource requirements for specific container groups and the necessary communication pathways between containers—but it is an orchestration platform that ensures these containers operate seamlessly together.
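To give a feel for what an orchestrator does with the declared resource requirements, here is a deliberately simple placement routine. It uses first-fit, a basic bin-packing heuristic chosen for brevity; production schedulers such as the one in Kubernetes apply richer filtering and scoring. The node sizes, container names, and resource figures are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_millis: int   # free CPU, in thousandths of a core
    memory_mb: int    # free memory
    containers: list[str] = field(default_factory=list)


@dataclass(frozen=True)
class ContainerSpec:
    name: str
    cpu_millis: int
    memory_mb: int


def schedule(specs: list[ContainerSpec], nodes: list[Node]) -> dict[str, Optional[str]]:
    """Place each container on the first node with enough free CPU and memory.

    Returns container name -> node name, or None when nothing fits.
    """
    placement: dict[str, Optional[str]] = {}
    for spec in specs:
        placement[spec.name] = None
        for node in nodes:
            if node.cpu_millis >= spec.cpu_millis and node.memory_mb >= spec.memory_mb:
                # Reserve the resources so later containers see what is left.
                node.cpu_millis -= spec.cpu_millis
                node.memory_mb -= spec.memory_mb
                node.containers.append(spec.name)
                placement[spec.name] = node.name
                break
    return placement


nodes = [Node("node-a", 1000, 2048), Node("node-b", 2000, 4096)]
specs = [
    ContainerSpec("api", 500, 1024),
    ContainerSpec("worker", 800, 1024),
    ContainerSpec("cache", 500, 1024),
    ContainerSpec("batch", 4000, 512),  # too large for any node
]

placement = schedule(specs, nodes)
print(placement)
```

The unplaceable `batch` container is the kind of condition an orchestrator surfaces to operators instead of failing silently.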

Numerous orchestration tools are available, each offering a unique approach. The most widely used is Kubernetes, which serves as the industry’s de facto standard for container orchestration. Kubernetes was originally developed by Google, building on its experience running containerized workloads internally, and was later released as open source. Alternatives include Docker Swarm, while many other offerings build on Kubernetes itself: Red Hat’s OpenShift Container Platform is a Kubernetes-based platform, and cloud providers offer managed services such as Azure Kubernetes Service.

5 Examples of Containerized Applications

Numerous companies are currently utilizing container technology, and their methods of implementation vary. The extensive range of containerized applications has reached a scale that could warrant its own congressional district. However, examining the approaches of several prominent early adopters reveals the transformative nature of this technology. Even more than five years after the initial surge of interest in containers, their adoption continues to expand significantly.

SPOTIFY

Spotify recognized the potential of container technology at an early stage. The audio streaming service started utilizing containers in 2013, coinciding with the emergence of Docker, and even created its own orchestration system known as Helios. According to Spotify software engineer Matt Brown, the company released Helios as open-source just a day before the announcement of Kubernetes, and shortly thereafter, Spotify began its transition to Kubernetes.

This transition significantly reduced the time required to deploy a new service from an hour to mere minutes or seconds, while also tripling CPU utilization efficiency, as noted by site reliability engineer James Wen in 2019. More recently, Spotify has developed and open-sourced Backstage, a developer portal that features a Kubernetes monitoring system.

THE NEW YORK TIMES

The New York Times, an early proponent of container technology, experienced a significant reduction in deployment times following its transition from traditional virtual machines to Docker. According to Tony Li, a staff engineer at the organization, what once required as much as 45 minutes was reduced to “a few seconds to a couple of minutes” by 2018, approximately two years after the Times shifted from private data centers to cloud infrastructure and embraced cloud-native technologies.

BUFFER

Various social media platforms utilize distinct image aspect ratios, necessitating that social scheduling tools like Buffer adjust images to ensure compatibility across the different channels associated with a user’s account.

In 2016, as Buffer increased its use of Docker for application deployment, the image resizing feature was among the first services to be fully developed using a contemporary container orchestration system. This approach to containerization enabled a level of continuous deployment that has since become essential in the DevOps landscape. Dan Farrelly, Buffer’s chief technology officer, remarked about a year after the migration began, “We were able to detect bugs and fix them, and get them deployed super fast. The second someone is fixing [a bug], it’s out the door.”

SQUARESPACE

Squarespace initiated its transition from virtual machines to containers in approximately 2016. Like many others during the virtual machine era, the website-hosting platform faced challenges related to computing resources. According to Kevin Lynch, a principal software engineer at Squarespace, developers often dedicated significant time to provisioning machines for new services or scaling existing ones. This transition enabled developers to deploy services independently of site reliability engineering, resulting in a reduction of deployment times by as much as 85 percent, Lynch noted.

GITLAB

GitLab has identified the adoption of Kubernetes as one of the most significant factors contributing to its long-term growth, alongside trends such as remote work and open source technologies. This is not surprising, considering that both GitLab and Kubernetes are fundamental components of the DevOps ecosystem. In 2020, Marin Jankovski, GitLab’s director of platform infrastructure, provided insights into how the shift from traditional virtual machines to containerization has transformed the company’s infrastructure in terms of size, performance, and deployment efficiency. Essentially, applications are now operating more efficiently on a reduced number of machines. Specifically, workloads are managed on three nodes instead of ten, processing capabilities have increased threefold, and deployment times have improved by 50%, according to Jankovski.

Conclusion

Containerization has revolutionized DevOps workflows, fundamentally altering how applications are developed, deployed, and managed. By encapsulating applications along with their dependencies in lightweight, portable containers, DevOps teams empower organizations to enhance speed, scalability, and reliability in their delivery processes.

The advantages of containers are numerous, including portability, scalability, isolation, resource efficiency, consistency, and rapid deployment. These characteristics make containers an essential tool in contemporary DevOps practices.

By implementing best practices and leveraging container platforms such as Docker, Kubernetes, and cloud-managed services, organizations can maximize the advantages of containerization, enabling them to accelerate the delivery of innovative solutions and foster growth in a competitive marketplace.