DevOps aims to accelerate development, ensure regular testing, and facilitate more frequent releases, all while enhancing quality and reducing costs. To support these objectives, DevOps monitoring tools offer automation and enhanced measurement and visibility across the entire development lifecycle, encompassing planning, development, integration, testing, deployment, and operations.

The contemporary software development lifecycle is quicker than ever, with various development and testing phases occurring concurrently. This evolution has led to the emergence of DevOps, which transforms isolated teams responsible for development, testing, and operations into a cohesive unit that handles all functions and adopts the principle of “you build it, you run it” (YBIYRI).

Given the prevalence of frequent code changes, development teams require DevOps monitoring to gain a thorough and real-time perspective of the production environment.

What Is DevOps Monitoring?

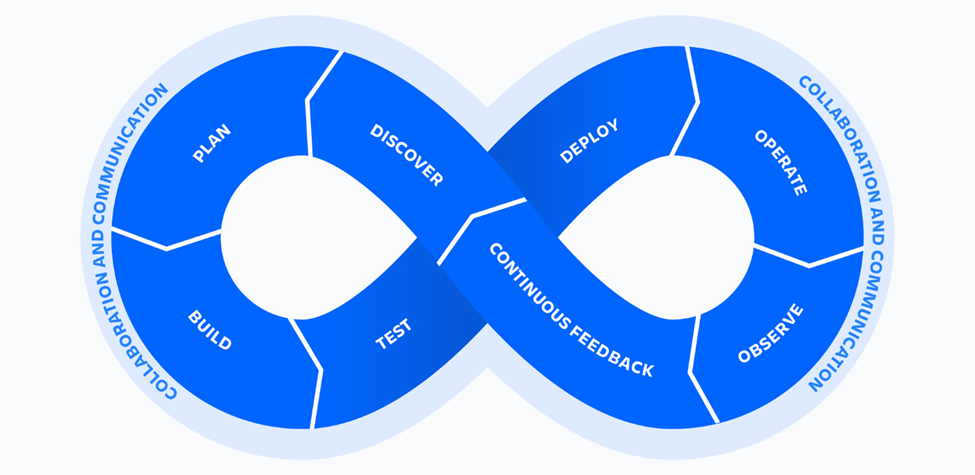

DevOps monitoring encompasses the comprehensive oversight of the development lifecycle, which includes the planning, development, integration, testing, deployment, and operations phases. It provides a holistic, real-time view of the performance of applications, services, and infrastructure in the production environment. Features such as real-time data streaming, historical analysis, and visualizations play a vital role in monitoring applications and services. Engineers sometimes call this process Continuous Monitoring (CM) or Continuous Control Monitoring (CCM), but the underlying concept is the same.

In DevOps, the process follows a continuous pipeline. This pipeline consists of planning, developing, integrating, testing, deploying, and operating phases.

Practicing DevOps can be quite involved, but the benefits justify the effort.

Some benefits of DevOps monitoring include:

- Define, track, and measure meaningful key performance indicators (KPIs) across all aspects of DevOps.

- Increase the observability of various components of your DevOps stack so you can identify when they degrade in performance, security, cost, or other aspects.

- Detect and report anomalies to the relevant teams quickly so they can resolve issues before they affect the user experience.

- Analyze logs and metrics to uncover root causes as quickly as possible. Tracking logs and metrics helps pinpoint where and when an issue started. As a result, your Mean Time To Detection (MTTD), Mean Time To Isolate (MTTI), Mean Time To Repair, and Mean Time To Recovery (both commonly abbreviated MTTR) can improve.

- Respond to threats, either through on-call engineers or automatically, using a variety of tools.

- Find opportunities for automation throughout the DevOps process that will improve engineers’ DevOps toolchains and efficiency.

- Identify patterns in system behavior that a DevOps engineer should be on the lookout for in the future.

- Create a continuous feedback loop that improves collaboration among engineers, users (internal and external), and the rest of the organization.
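To make the detection and recovery metrics concrete, here is a minimal Python sketch that computes MTTD and MTTR from incident timestamps. The `Incident` record and its field names are hypothetical, and the sketch assumes recovery time is measured from when the fault began; some teams measure it from detection instead, so adjust it to your own definition.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # when the fault began
    detected: datetime  # when monitoring raised an alert
    resolved: datetime  # when service was restored


def _mean_minutes(durations: list[timedelta]) -> float:
    """Average a list of durations and return the result in minutes."""
    return mean(d.total_seconds() for d in durations) / 60


def mttd(incidents: list[Incident]) -> float:
    """Mean Time To Detection: fault start to alert."""
    return _mean_minutes([i.detected - i.started for i in incidents])


def mttr(incidents: list[Incident]) -> float:
    """Mean Time To Recovery: fault start to restored service (assumed definition)."""
    return _mean_minutes([i.resolved - i.started for i in incidents])


t0 = datetime(2024, 1, 1, 12, 0)
history = [
    Incident(t0, t0 + timedelta(minutes=4), t0 + timedelta(minutes=30)),
    Incident(t0, t0 + timedelta(minutes=8), t0 + timedelta(minutes=50)),
]
print(f"MTTD: {mttd(history):.1f} min, MTTR: {mttr(history):.1f} min")
# prints "MTTD: 6.0 min, MTTR: 40.0 min"
```

Tracking these numbers per month or per service makes it easier to see whether monitoring changes are actually shortening detection and recovery.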

By overseeing DevOps operations, an organization can ensure excellent customer experiences while simultaneously lowering expenses across the DevOps lifecycle.

Why DevOps Monitoring?

To enhance aspects such as load balancing and security, or to develop process tools for rollback protocols and self-healing infrastructure, effective monitoring is crucial for gaining insights into your applications and infrastructure. DevOps monitoring provides a comprehensive, user-friendly solution that streamlines visibility, thereby enhancing both the software delivery process and the quality of the final product.

DevOps monitoring plays a vital role in maintaining the performance and security of IT systems. However, its importance extends beyond these functions. Here are additional reasons why DevOps monitoring is indispensable:

Improved performance tracking

DevOps monitoring allows teams to continuously oversee the performance of both applications and infrastructure, so issues are detected and resolved quickly. Over time, this helps keep systems efficient and users satisfied.

Cost optimization

DevOps monitoring tools track resource utilization and identify opportunities for cost savings. By analyzing how much different components and processes contribute to spending, organizations can reduce expenses and improve their financial management.

Proactive anomaly detection

DevOps teams have the capability to detect anomalies at an early stage by monitoring critical metrics and logs, thereby preventing any negative impact on end users. By implementing proactive strategies, they can minimize downtime and enhance the reliability of applications.
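One simple way to flag anomalies in a metric stream is a rolling z-score: compare each new sample with the mean and standard deviation of the samples just before it. The sketch below assumes evenly spaced samples (for example, request latency in milliseconds) and uses illustrative defaults for the window size and threshold; production tools typically use more robust methods that also account for seasonality.

```python
from statistics import mean, stdev


def find_anomalies(samples: list[float], window: int = 20, threshold: float = 3.0) -> list[int]:
    """Return indices of samples that deviate from the trailing `window`
    samples by more than `threshold` standard deviations (rolling z-score)."""
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
        elif abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Latency in ms: a steady baseline followed by one sharp spike.
latency = [100, 102, 98, 101, 99] * 4 + [100, 250, 101]
print(find_anomalies(latency))  # prints [21]
```

Note that the spike itself inflates the baseline for the following samples, which is one reason real systems often exclude flagged points from the baseline.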

Enhanced collaboration

Ongoing monitoring fosters cooperation between development and operations teams. By exchanging insights and data, these teams can address challenges and improve efficiency. This leads to a more streamlined and productive workflow.

Continuous improvement

Monitoring in DevOps provides essential insights that can optimize DevOps practices. By examining performance trends, teams can enhance both the efficiency and effectiveness of their operations.

Quick troubleshooting

Continuous monitoring enables teams to quickly detect problems, thereby accelerating the resolution process. This approach also minimizes the duration required to return to normal operations.

Better decision-making

Insights derived from monitoring tools enable informed decision-making. Teams evaluate this data to identify priority tasks and allocate resources efficiently.

Improved user experience

By maintaining regular oversight, DevOps teams can provide a seamless and positive experience for end users. This involves guaranteeing that services maintain optimal response times, high availability, and dependable performance.

Security and compliance

Monitoring in DevOps encompasses the assessment of security metrics and the evaluation of compliance with industry standards. This process aids in identifying vulnerabilities and ensuring a secure IT environment. Additionally, it plays a crucial role in protecting sensitive information and preserving user confidence.

DevOps monitoring vs. observability

Picture the DevOps infinity loop, with the left side as the product side and the right side as the operational side. A product manager launching a new feature into production focuses on how the project is divided into tasks and user stories. A developer on the left side must understand how the feature will be deployed, which includes managing project tickets, user stories, and dependencies. If developers follow the DevOps principle of “you build it, you run it,” they will also be responsible for addressing incidents.

On the operational side of the lifecycle, a site reliability engineer must understand which services are measured and monitored so that any issues can be resolved promptly. Without a cohesive DevOps toolchain that integrates these processes, the environment can become disorganized and chaotic. A well-integrated toolchain, by contrast, provides better insight into ongoing activities.

Types Of Monitoring in DevOps: What Should You Monitor?

DevOps monitoring plays a crucial role in automating processes within the software development lifecycle. Its primary objective is to enhance the transparency, performance, and overall user experience of IT environments. Various DevOps monitoring strategies exist to oversee hardware and software components, storage solutions, servers, and more. These include:

- Infrastructure monitoring

- Application Performance Monitoring (APM)

- Network monitoring

- Log monitoring

- Security monitoring

- Synthetic monitoring

- Cost monitoring

Infrastructure monitoring

Purpose: Infrastructure monitoring is dedicated to overseeing the health and performance of the essential hardware resources that underpin applications, such as servers, storage systems, network devices, and data centers. This approach helps ensure that both physical and virtual components function efficiently and allows potential problems to be detected before they affect application performance.

Key Areas:

- Servers: Monitoring CPU usage, memory utilization, disk I/O, and system uptime.

- Network Devices: Monitoring routers, switches, and other networking components for latency, packet loss, and bandwidth usage.

- Storage: Monitoring disk space, read/write speeds, and performance of databases.

Key Metrics:

- CPU Usage: High CPU usage can indicate an overloaded server or application that needs optimization.

- Memory Usage: Monitoring memory usage helps in identifying memory leaks or insufficient resources.

- Disk I/O: Disk input/output monitoring ensures that storage systems are not overburdened, which can slow down applications.

- Network Latency: This measures the time taken for data to travel from one point to another, which is critical in ensuring smooth communication between systems.

There are two approaches to infrastructure monitoring:

- In agent-based infrastructure monitoring, engineers install an agent (software) on each of their hosts, either physical or virtual. The agent collects infrastructure metrics and sends them to a monitoring tool for analysis and visualization.

- Agentless infrastructure monitoring doesn’t involve installing an agent. Instead, it uses built-in protocols such as SSH, NetFlow, SNMP, and WMI to relay infrastructure component metrics to monitoring tools.
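The agent-based approach can be sketched in a few lines of Python. This hypothetical agent gathers host metrics using only the standard library (`shutil.disk_usage`, plus `os.getloadavg` where the platform provides it) and serializes each sample as JSON. The payload fields are assumptions, and the step that ships payloads to a monitoring backend is deliberately left out, since it depends on the tool you use.

```python
import json
import os
import shutil
import socket
import time


def collect_host_metrics(path: str = "/") -> dict:
    """Gather a few host-level metrics using only the standard library."""
    disk = shutil.disk_usage(path)
    metrics = {
        "host": socket.gethostname(),
        "timestamp": int(time.time()),
        "cpu_count": os.cpu_count(),
        "disk_used_pct": round(100 * disk.used / disk.total, 2),
    }
    try:
        load_1m, load_5m, load_15m = os.getloadavg()
    except (AttributeError, OSError):
        pass  # load average is unavailable on this platform (e.g. Windows)
    else:
        metrics.update(load_1m=load_1m, load_5m=load_5m, load_15m=load_15m)
    return metrics


def run_agent(iterations: int, interval_s: float = 10.0) -> list[str]:
    """Sample metrics on a fixed interval and return the JSON payloads.
    A real agent would send each payload to a monitoring backend instead."""
    payloads = []
    for n in range(iterations):
        if n:
            time.sleep(interval_s)  # wait between samples, not before the first
        payloads.append(json.dumps(collect_host_metrics()))
    return payloads


print(run_agent(iterations=1)[0])
```

Running code like this on every host is exactly the resource cost mentioned below, which is why agents are usually written to be lightweight.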

There are advantages and disadvantages to each approach. Monitoring using agents, for example, collects more detailed information because it is tailored to the device or components you wish to monitor.

On the other hand, if you migrate to a different platform, the agent may not be compatible with it, potentially resulting in data loss. Agents can also consume significant resources on your servers, leading to increased latency or additional expenses.

Various infrastructure elements, such as virtual machines (including Hyper-V and VMware), servers, networking equipment, storage solutions, and flow devices, offer built-in agentless monitoring features, and you can monitor these components from a central location. By combining both approaches, you can build a robust monitoring strategy.

An effective infrastructure monitoring tool should be able to:

- Monitor server availability.

- Track CPU and disk usage to diagnose issues that degrade the performance of the setup.

- Show how reliable and resilient a system is by tracking its uptime.

- Track system response times, including when errors occur.
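A basic availability and response-time probe can be built on a TCP connection attempt. This is a minimal sketch assuming the service listens on a TCP port; the host, port, and timeout are parameters you would supply, and connect time is only a rough proxy for full application response time.

```python
import socket
import time
from typing import Optional, Tuple


def check_tcp(host: str, port: int, timeout_s: float = 2.0) -> Tuple[bool, Optional[float]]:
    """Return (is_up, connect_time_ms). A refused or timed-out connection counts as down."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            elapsed_ms = (time.perf_counter() - start) * 1000
    except OSError:  # includes connection refused and socket timeouts
        return False, None
    return True, elapsed_ms


def uptime_pct(results: list) -> float:
    """Share of (is_up, connect_time_ms) checks that succeeded, as a percentage."""
    return 100 * sum(1 for up, _ in results if up) / len(results)
```

Run on a schedule, the stored `(is_up, connect_time_ms)` results give both an availability history and a response-time trend for each server.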

DevOps Tools for Infrastructure Monitoring: Nagios, Prometheus, Zabbix.

Application performance monitoring (APM)

Purpose: APM is dedicated to monitoring the performance and availability of software applications to confirm they operate as intended. This oversight is crucial for detecting and addressing problems associated with application performance, user experience, and overall availability.

Key Areas:

- User Experience: Monitoring user interactions with the application to ensure they are having a smooth and error-free experience.

- Transaction Tracing: Tracking the flow of transactions across various components of the application to identify bottlenecks.

- Application Dependencies: Monitoring third-party services or APIs that the application depends on to ensure they are functioning correctly.

Application performance monitoring tools mainly track metrics such as:

- Response Time: Measures the time taken for an application to respond to a user request.

- Error Rates: Tracks the number of errors occurring within the application, which can indicate issues in the code or underlying infrastructure.

- Throughput: The number of transactions or requests processed by the application within a certain timeframe.

- Application Availability: Ensures that the application is available and accessible to users.
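As an illustration, the sketch below derives response time, error rate, and throughput from a batch of request records. The `Request` record is hypothetical, it counts HTTP 5xx responses as errors (an assumption; your definition of an error may differ), and it uses the nearest-rank method for percentiles.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float  # time taken to respond to the request
    status: int         # HTTP status code


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(requests: list[Request], window_s: float) -> dict:
    """APM-style summary of the requests observed during a window of `window_s` seconds."""
    durations = [r.duration_ms for r in requests]
    errors = sum(1 for r in requests if r.status >= 500)  # assumption: only 5xx counts as an error
    return {
        "p50_ms": percentile(durations, 50),
        "p95_ms": percentile(durations, 95),
        "error_rate": errors / len(requests),
        "throughput_rps": len(requests) / window_s,
    }


batch = [Request(10.0 * i, 200) for i in range(1, 10)] + [Request(100.0, 500)]
print(summarize(batch, window_s=5.0))
```

Percentiles such as p95 are generally more informative than averages for response time, because a small share of slow requests can hide behind a healthy-looking mean.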

Application performance monitoring enables teams to detect and address problems proactively, preventing them from impacting application performance. Tools for application monitoring generally assess API responses, transaction duration and volume, system responses, and the overall health of the application.

An ideal application monitoring platform should be able to:

- Offer native integration with the tools used in the development workflow.

- Provide access to real-time data for faster detection and removal of issues and bottlenecks.

- Improve and secure internal data communications and build robust security controls.

- Generate reader-friendly reports and dashboards.

- Deliver historical trends of events and their correlations to identify hidden risks.

DevOps Tools for Application Monitoring: Datadog, Splunk, AppDynamics, New Relic, Dynatrace.

Network monitoring

Purpose: Network monitoring involves overseeing the performance and availability of various network elements, including routers, switches, firewalls, and connections. This process is essential for maintaining a dependable network infrastructure that meets the communication requirements of applications.

Network monitoring tools detect performance issues within a network by evaluating its key components and tracking vital metrics such as:

- Latency: Measures the delay in data transfer across the network, critical for applications requiring real-time communication.

- Packet Loss: Indicates the percentage of data packets that do not reach their destination, which can lead to degraded application performance.

- Network Throughput: The amount of data successfully transferred from one point to another within a network in a given time period.

- Device Availability: Monitors whether network devices like routers and switches are online and performing as expected.

These metrics help teams measure and correct network issues.
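Latency and packet loss can be derived from a series of probe results, such as the round-trip times reported by `ping`. In this sketch, each probe is represented as a round-trip time in milliseconds, with `None` marking a probe that received no reply; collecting the probes themselves is out of scope.

```python
from statistics import mean
from typing import List, Optional


def summarize_probes(rtts_ms: List[Optional[float]]) -> dict:
    """Summarize probe results; None marks a probe that got no reply."""
    replies = [r for r in rtts_ms if r is not None]
    lost = len(rtts_ms) - len(replies)
    return {
        "sent": len(rtts_ms),
        "received": len(replies),
        "packet_loss_pct": 100 * lost / len(rtts_ms),
        "avg_latency_ms": mean(replies) if replies else None,
        "max_latency_ms": max(replies) if replies else None,
    }


print(summarize_probes([10.0, 12.0, None, 14.0]))
```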

Network monitoring involves five key processes: discovery, delineation, detection, observation, and reporting. Through these processes, network monitoring enables IT systems to proactively identify issues. Furthermore, it contributes to enhancing the performance and availability of the monitored components.

Key Areas:

- Network Latency: Monitoring the time it takes for data to travel between two points in the network.

- Bandwidth Usage: Tracking how much data is being transmitted over the network, which helps in identifying congestion or potential bandwidth shortages.

- Packet Loss: Monitoring the percentage of data packets that are lost during transmission, which can affect application performance.

- Uptime/Downtime: Ensuring that network devices are online and functioning as expected.

DevOps Tools for Network Monitoring: Wireshark, Nmap (Network Mapper), SolarWinds Network Performance Monitor, Nagios.

Log monitoring

Purpose: Log monitoring encompasses the examination and oversight of log files produced by applications and infrastructure. These logs offer comprehensive documentation of events, errors, transactions, and user interactions, rendering them an essential tool for both troubleshooting and security oversight.

Key Areas:

- Error Logs: Monitoring error logs helps in quickly identifying and addressing issues within the application or system.

- Access Logs: These logs track who is accessing the system and from where, which is useful for security audits and user behavior analysis.

- Event Logs: These logs capture significant system events such as configuration changes, failures, and security breaches.

Key Metrics:

- Log Volume: Monitoring the volume of logs helps in identifying unusual activity, such as a sudden spike in errors or access attempts.

- Error Frequency: Frequent errors in logs could indicate recurring issues that need attention.

- Log Anomalies: Unusual patterns in logs, such as unexpected login attempts or abnormal system events, can be indicative of security breaches or system malfunctions.
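The sketch below turns raw log lines into an error-frequency series and flags minutes with an unusual spike. It assumes a hypothetical line format (`<ISO timestamp>Z <LEVEL> <message>`) and a simple rule: a minute is flagged when its error count exceeds three times the median of the minutes that logged any errors.

```python
import re
from collections import Counter
from statistics import median

# Hypothetical log format: "<ISO-8601 timestamp>Z <LEVEL> <message>"
LINE_RE = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}):\d{2}Z\s+(\w+)\s+(.*)$")


def errors_per_minute(lines: list[str]) -> Counter:
    """Count ERROR lines per minute; keys are timestamps truncated to the minute."""
    counts: Counter = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and match.group(2) == "ERROR":
            counts[match.group(1)] += 1
    return counts


def spiking_minutes(counts: Counter, factor: float = 3.0) -> list[str]:
    """Minutes whose error count exceeds `factor` times the median.
    Note: the median only covers minutes that logged at least one error."""
    if not counts:
        return []
    baseline = median(counts.values())
    return sorted(minute for minute, n in counts.items() if n > factor * baseline)
```

The same per-minute counting works for log volume or login attempts; only the filter on each parsed line changes.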

Tools: ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, Graylog.

Security monitoring

Purpose: Security monitoring encompasses the observation and evaluation of security-related activities across applications, networks, and infrastructure. This process is essential for recognizing potential threats, identifying breaches, and maintaining adherence to security policies.

Key Areas:

- Intrusion Detection: Monitoring for unauthorized access or suspicious activity within the system.

- Vulnerability Scanning: Identifying and tracking vulnerabilities within the system that could be exploited by attackers.

- User Activity Monitoring: Keeping an eye on user actions to ensure they comply with security policies and to detect any potential insider threats.

Key Metrics:

- Security Incidents: The number and type of security breaches or incidents detected.

- User Access Logs: Monitoring who accessed what resources and when, crucial for audit trails and detecting unauthorized access.

- Vulnerability Scores: Metrics that indicate the severity and impact of vulnerabilities found within the system.
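As an example of using access logs for security monitoring, the following sketch flags source IPs with repeated failed logins inside a sliding time window, a common brute-force signal. The `LoginEvent` record and the thresholds (more than five failures in 60 seconds) are illustrative assumptions, not recommendations.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class LoginEvent:
    timestamp: float  # seconds since epoch
    source_ip: str
    success: bool


def flag_bruteforce(events: list[LoginEvent], max_failures: int = 5,
                    window_s: float = 60.0) -> set[str]:
    """Return source IPs with more than `max_failures` failed logins
    within any sliding window of `window_s` seconds."""
    recent: dict[str, deque] = defaultdict(deque)  # failure timestamps per IP
    flagged: set[str] = set()
    for ev in sorted(events, key=lambda e: e.timestamp):
        if ev.success:
            continue
        window = recent[ev.source_ip]
        window.append(ev.timestamp)
        # Drop failures that have slid out of the time window.
        while ev.timestamp - window[0] > window_s:
            window.popleft()
        if len(window) > max_failures:
            flagged.add(ev.source_ip)
    return flagged
```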

Tools: Snort, Splunk, OSSEC.

Synthetic monitoring

Purpose: Synthetic monitoring simulates user interactions with applications or websites to evaluate their performance and availability from an external perspective. This proactive strategy allows teams to identify and resolve issues before they affect real users.

Key Areas:

- Transaction Testing: Simulating transactions to ensure they are functioning as expected, such as login processes or payment gateways.

- Page Load Times: Measuring how long it takes for a webpage to load under simulated conditions.

- API Testing: Simulating API calls to ensure they respond correctly and within an acceptable timeframe.

Key Metrics:

- Response Times: Measures how quickly the application or website responds to simulated user interactions.

- Error Rates: Identifies the frequency and type of errors encountered during simulated tests.

- Availability: Tracks whether the application or website is up and running from different geographic locations.
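A synthetic check is essentially a scripted request whose result you record. Here is a minimal sketch using Python's standard `urllib.request`: it issues one GET request, records the status code and elapsed time, and marks the check as failed on an error status, a connection failure, or a response slower than a chosen budget. The 1,000 ms budget is an assumption; real synthetic monitoring tools also run checks on a schedule and from multiple locations.

```python
import time
import urllib.error
import urllib.request


def synthetic_check(url: str, timeout_s: float = 5.0, budget_ms: float = 1000.0) -> dict:
    """Run one scripted GET request and report status, latency, and pass/fail."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            resp.read()
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with an error status
    except OSError:        # URLError, refused connections, and timeouts
        status = None
    elapsed_ms = (time.perf_counter() - start) * 1000
    ok = status is not None and 200 <= status < 400 and elapsed_ms <= budget_ms
    return {"url": url, "status": status, "elapsed_ms": round(elapsed_ms, 1), "ok": ok}
```

A transaction test such as a login flow would chain several of these requests and fail the whole check if any step fails.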

Tools: Pingdom, New Relic Synthetic Monitoring, Catchpoint.

Cost monitoring

Keeping track of expenses throughout the DevOps pipeline is essential to avoid exceeding budget limits. This involves evaluating resource usage.

Alongside real-time analytics, advanced cost management solutions can deliver accurate cost information on a per-unit and per-customer or project basis, enhancing collaboration with engineering and finance departments for required follow-up actions.

This capability gives you detailed insight into your Cost of Goods Sold (COGS), gross margins, and resource usage at each phase of the DevOps workflow.
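The unit-cost calculations described above reduce to simple arithmetic once usage is attributed. This sketch splits a shared bill across customers in proportion to a usage measure, computes a per-unit cost such as cost per request, and derives gross margin as (revenue - COGS) / revenue. The customer names and figures are hypothetical, and proportional allocation is only one of several possible allocation schemes.

```python
def cost_per_customer(total_cost: float, usage: dict[str, float]) -> dict[str, float]:
    """Allocate a shared bill in proportion to each customer's usage."""
    total_usage = sum(usage.values())
    return {customer: total_cost * used / total_usage for customer, used in usage.items()}


def cost_per_unit(total_cost: float, units: int) -> float:
    """Cost per request, per deployment, or any other countable unit."""
    return total_cost / units


def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin as a fraction: (revenue - COGS) / revenue."""
    return (revenue - cogs) / revenue


# Hypothetical month: a $1,000 shared bill and 2 million requests
print(cost_per_customer(1000.0, {"acme": 600.0, "globex": 400.0}))
print(cost_per_unit(1000.0, 2_000_000))
print(gross_margin(revenue=5000.0, cogs=1000.0))
```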

Various monitoring approaches are essential components of a DevOps strategy, each targeting specific aspects of system performance, reliability, and security. By effectively implementing these monitoring techniques, DevOps teams can ensure the smooth operation of their applications and infrastructure, proactively identify and resolve issues, and improve the overall user experience.

Metrics To Watch with Your DevOps Monitoring Tools

In DevOps, monitoring involves tracking critical metrics to ensure optimal system performance, reliability, and security. Below are several important metrics to keep an eye on:

CPU usage

Tracking CPU usage is crucial as it provides important insights into the processing power utilized by your applications. High CPU usage may signal performance problems that need immediate intervention. On the other hand, low usage might indicate that resources are not being fully utilized, presenting chances for optimization.
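Alerting on CPU usage usually requires the condition to persist, so a single short spike does not page anyone. The sketch below assumes CPU samples expressed as percentages and collected at a fixed interval; the 90% and 10% thresholds and the five-sample requirement are placeholder values to tune for your environment.

```python
from statistics import mean


def sustained_breach(samples: list[float], threshold: float, min_consecutive: int) -> bool:
    """True if the most recent `min_consecutive` samples all exceed `threshold`."""
    if len(samples) < min_consecutive:
        return False
    return all(s > threshold for s in samples[-min_consecutive:])


def classify_cpu(samples: list[float], high: float = 90.0, low: float = 10.0,
                 min_consecutive: int = 5) -> str:
    """Classify recent CPU usage (in percent) as an alert, a review item, or ok."""
    if sustained_breach(samples, high, min_consecutive):
        return "alert: sustained high CPU"
    if mean(samples) < low:
        return "review: possibly over-provisioned"
    return "ok"
```

The low-usage branch reflects the point above that underused CPU can signal an optimization opportunity rather than a fault.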

Memory usage

Monitoring memory usage is essential. It is key to detecting memory leaks and ensuring that applications have sufficient resources to perform well. Excessive memory usage can degrade performance and lead to system failures.

Log data

Analyzing log data is essential for gaining insights into the performance of applications and systems. Through log monitoring, it becomes possible to detect trends, resolve issues, and maintain adherence to security protocols.

Network traffic

Monitoring network traffic is crucial for understanding how data moves within your infrastructure. It helps identify bandwidth problems and possible network threats, and it can reveal ways to make data transfer more efficient.

Disk input/output (Disk I/O)

Disk I/O metrics offer valuable information regarding the read and write operations of your storage devices. Keeping track of these metrics can help identify performance problems related to storage, including issues with latency and throughput.

Service latency

Tracking service latency helps identify communication delays in your application. Elevated latency can negatively impact overall performance and user satisfaction.

Error rates

Tracking error rates is essential for pinpointing issues in your workflow. A notable rise in errors may suggest deployment issues or configuration mistakes.

Resource use

Tracking resource utilization allows for a better understanding of how effectively your infrastructure allocates its resources. This process includes observing the usage of your system’s CPU, memory, disk space, and network bandwidth.

Response times

Monitoring response times is essential for ensuring that your applications achieve the expected performance standards. Slow response times can lead to unsatisfactory user experiences and may reveal underlying problems that require prompt resolution.

Deployment frequency

Tracking the rate of new code deployments can provide insightful data regarding the efficiency of your CI/CD pipeline. A high frequency of deployments often suggests a robust DevOps methodology, while a lower frequency may highlight potential challenges within the development cycle.
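Deployment frequency can be computed directly from deployment records. This sketch assumes you have the calendar date of each production deployment (for example, exported from your CI/CD system) and reports the average number of deployments per week over the span those records cover.

```python
from datetime import date


def deployments_per_week(deploy_dates: list[date]) -> float:
    """Average production deployments per week over the period the records cover."""
    if not deploy_dates:
        return 0.0
    span_days = (max(deploy_dates) - min(deploy_dates)).days + 1  # inclusive of both ends
    return len(deploy_dates) / (span_days / 7)


# One deployment per day for two weeks
daily = [date(2024, 1, d) for d in range(1, 15)]
print(deployments_per_week(daily))  # prints 7.0
```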

Uptime and availability

Ensuring high availability and reducing downtime are essential for maintaining user satisfaction. By tracking uptime and availability metrics, organizations can enhance service reliability and pinpoint opportunities for improvement.
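Availability is commonly expressed as the percentage of time a service is up, and an availability target implies a downtime budget. The sketch below assumes a 30-day period by default; for example, a 99.9% target over 30 days leaves 30 × 24 × 60 × 0.001 = 43.2 minutes of allowable downtime.

```python
def availability_pct(uptime_s: float, downtime_s: float) -> float:
    """Percentage of the measured period during which the service was up."""
    return 100 * uptime_s / (uptime_s + downtime_s)


def downtime_budget_minutes(target_pct: float, period_days: float = 30) -> float:
    """Maximum downtime allowed over `period_days` for an availability target."""
    return period_days * 24 * 60 * (1 - target_pct / 100)


print(round(downtime_budget_minutes(99.9), 1))  # prints 43.2
print(round(availability_pct(uptime_s=86_400 - 864, downtime_s=864), 2))  # prints 99.0
```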

Transaction volumes

Keeping track of the transaction volume processed by your application enables you to analyze usage patterns and assess scaling requirements. Additionally, it assists in pinpointing performance issues that may arise during peak demand periods.

What Factors to Consider When Choosing a DevOps Monitoring Tool

Here’s a quick checklist of some of the crucial considerations to make when selecting monitoring tools for DevOps teams.

- All-in-one observability – Rather than having separate views that often lead to visibility gaps, you’ll want a solution that lets you view most components together.

- User-friendly interface – Select a monitoring tool with customizable dashboards and interactive features to help you tailor the information to your requirements.

- Automatic discovery – This automates the process of searching for and identifying IT assets in a network. It saves time and effort. Many monitoring tools offer this, but not all of them do.

- Real-time metrics, events, and distributed traces – Real-time analytics uses monitoring data as it becomes available, enabling you to detect errors before they become costly problems.

- Root cause analysis – This approach lets you identify and fix the underlying cause of a problem instead of treating symptoms that later return.

- Data retention periods – Most DevOps monitoring tools have specific data retention limits. A longer retention period will cost more, but it can help you identify system health patterns, especially when problems occur intermittently.

- AIOps and Machine Learning – Some DevOps monitoring tools use AI to speed up searching for, diagnosing, and resolving technical issues, saving time and improving productivity.

- Resource usage and related cost monitoring – Cost is now a first-class metric for most organizations, with industry surveys estimating that about 32% of cloud budgets are wasted. Yet most cost tools are clunky and inexact, and they mostly report total and average costs rather than specifics such as Cost per Customer, Cost per Deployment, or Cost per Request.

With these factors in mind, here are some of the best DevOps monitoring tools to support continuous improvement.

Top DevOps Monitoring Tools by Category

DevOps tools offer several benefits:

- Automate repetitive tasks. This frees engineers to focus on the most critical work, such as patching security threats or releasing advanced features more quickly to boost your organization’s competitiveness.

- Reduce human error to release reliable code more quickly.

- Improve the software development process using Continuous Integration and Continuous Delivery/Deployment (CI/CD).

- Optimize costs by combining these benefits.

Here are some of the top monitoring tools you can use, organized into several DevOps categories.

Open-source DevOps monitoring tools

For those with limited financial resources or seeking customizable continuous monitoring solutions, open-source software can be advantageous. Below are four examples:

1. Nagios

Nagios is a DevOps monitoring solution that provides comprehensive monitoring for servers, applications, and networks. It can track any device that has an IP address and can check server services such as POP, SMTP, IMAP, HTTP, and Proxy on both Linux and Windows platforms. It also monitors host resources such as CPU usage, swap space, memory, and load.

Nagios features a web interface and supports more than 5,000 integrations for server monitoring. The open-source version, Nagios Core, is available as a free download, while the commercial version, Nagios XI, extends its monitoring capabilities to include infrastructure, applications, networking, services, log files, SNMP, and operating systems.
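Nagios checks are typically implemented as plugins: small programs that print a one-line status and report the result through their exit code (0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN), optionally followed by performance data after a `|`. The following Python sketch follows that convention for a disk-usage check; the thresholds are illustrative, and in a real plugin a wrapper would print the message and call `sys.exit()` with the returned code.

```python
import shutil
from typing import Tuple

# Standard Nagios plugin return codes
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_disk(path: str = "/", warn_pct: float = 80.0, crit_pct: float = 90.0) -> Tuple[int, str]:
    """Return (exit_code, status_line) in the Nagios plugin style."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as err:
        return UNKNOWN, f"DISK UNKNOWN - {err}"
    used_pct = 100 * usage.used / usage.total
    if used_pct >= crit_pct:
        code, label = CRITICAL, "CRITICAL"
    elif used_pct >= warn_pct:
        code, label = WARNING, "WARNING"
    else:
        code, label = OK, "OK"
    # Text before "|" is shown to humans; text after it is performance data.
    perfdata = f"used_pct={used_pct:.1f}%;{warn_pct};{crit_pct}"
    return code, f"DISK {label} - {used_pct:.1f}% used on {path} | {perfdata}"


code, message = check_disk()
print(code, message)
```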

2. Prometheus

Prometheus is an open-source monitoring system that is well suited to DevOps environments. It offers alerting capabilities (through its companion Alertmanager), keeps time-series data in memory and on local disk, and supports graphical data visualization through integration with Grafana. It also offers extensive support for integrations, client libraries, and metric types.
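Prometheus typically collects data by scraping an HTTP endpoint (conventionally `/metrics`) that returns metrics in its plain-text exposition format. The official Python client library, `prometheus_client`, generates this output for you; the standard-library sketch below renders it by hand purely to show what a scrape returns. The metric name and labels in the usage example are illustrative.

```python
def _escape(value: str) -> str:
    """Escape a label value as the text format requires (backslash, quote, newline)."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metric(name: str, metric_type: str, help_text: str,
                  samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one metric family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(f'{k}="{_escape(v)}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


print(render_metric(
    "http_requests_total", "counter", "Total HTTP requests handled.",
    [({"method": "GET", "code": "200"}, 1027), ({"method": "POST", "code": "500"}, 3)],
), end="")
```

Each labeled line becomes its own time series in Prometheus, which is why label values with unbounded cardinality (such as user IDs) are usually avoided.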

3. Zabbix

Zabbix is a leading alternative to Nagios, providing real-time monitoring for network traffic, services, applications, cloud environments, and servers. It can be deployed both on-premises and in the cloud. Recent releases have added enhancements in distributed monitoring, high availability, and the range of supported metrics, helping you expand your monitoring capabilities as your DevOps landscape changes.

4. Monit

For those seeking a compact monitoring solution for Unix systems, Monit is an excellent choice. It monitors daemon processes, particularly those started at system boot from /etc/init.d/, including services like Apache, sshd, Sendmail, and MySQL. Monit provides error detection and alert notifications, and it also monitors filesystems, directories, and files on the local machine. It can also check remote hosts and systems over various internet protocols (such as HTTP and SMTP), and it tracks CPU and memory usage as well as load averages.

All-in-One DevOps monitoring tools

Several platforms provide extensive DevOps observability and monitoring features. By consolidating various monitoring requirements into a single solution, these unified sources of truth reduce expensive visibility gaps, simplify processes, and eliminate unnecessary waste.

5. Datadog

DevOps teams are increasingly drawn to Datadog due to its comprehensive observability capabilities and effective root cause analysis features. The platform enables users to gather, evaluate, and disseminate system monitoring information across various environments, including on-premises setups and diverse cloud infrastructures such as private, public, hybrid, and multi-cloud systems.

This versatility makes Datadog a strong candidate for enterprise-level applications. Its agent is written in Go, and the monitoring-as-a-service platform integrates seamlessly with major cloud providers like AWS, Azure, and GCP. For those interested in monitoring digital experiences, Datadog supports a wide range of devices and operating systems, and it also offers a cloud cost management feature.

However, some Datadog users have raised concerns about the service’s high cost, prompting them to consider more affordable alternatives.

6. New Relic

New Relic stands out as a favored alternative to Datadog for various reasons. It provides comprehensive error tracking, precise anomaly detection, and sophisticated monitoring for containers, including Kubernetes. Additionally, New Relic enables real-time code debugging without the need for sampling.

In essence, New Relic serves as a complete observability platform, covering everything from infrastructure and application performance monitoring (APM) to serverless and real user monitoring (RUM). Furthermore, its support for OpenTelemetry helps ensure compatibility with most tools and integrations in your current or future technology stack.

To utilize New Relic, you must install the New Relic agent on your servers or any devices you wish to monitor. However, it is worth noting that New Relic does not prioritize security and compliance monitoring as much as some other alternatives.

7. Dynatrace

Beyond providing comprehensive observability for DevOps, Dynatrace places a strong emphasis on IT security through a suite of DevSecOps tools. The platform employs intelligent automation to safeguard cloud-native applications during runtime, effectively integrating security measures into your CI/CD pipelines.

With features like Runtime Vulnerability Analytics and Application Protection, Dynatrace helps reduce the risk of zero-day vulnerabilities. The DevOps team is empowered to continuously detect, assess, and mitigate application threats, such as command and SQL injection attacks. Furthermore, Dynatrace delivers enterprise-grade monitoring of business KPIs, a platform for bespoke application development, and real-time contextual topology mapping.

However, users may need to invest time in learning and optimizing the platform, and its pricing structure can be intricate.

8. LogicMonitor

LogicMonitor serves as an agentless, comprehensive observability platform tailored for DevOps applications. Like other leading monitoring solutions, it delivers real-time insights across various domains, including infrastructure and website performance. This cloud-based platform can be deployed within minutes, effortlessly scales to meet demand, and accommodates hybrid observability, integrating both cloud and on-premises environments.

Additionally, it offers in-depth monitoring capabilities for cloud-based, hyper-converged, and traditional storage systems. For organizations prioritizing the security of their remote work strategies, LogicMonitor’s remote and Software-Defined Wide Area Network (SD-WAN) monitoring features present a viable option.

Furthermore, LogicMonitor ensures data security during transmission and storage, implements robust role-based access control (RBAC), and utilizes TLS encryption for enhanced protection.

Application, network, and infrastructure monitoring tools

The following tools offer a nearly “all-in-one” solution for continuous monitoring.

9. Sensu by Sumo Logic

Sensu’s monitoring-as-code approach offers a comprehensive suite of features, including health checks, incident management, self-healing capabilities, alerting, and open-source observability across various environments. Users can define monitoring workflows in declarative configuration files, which makes them easy to share among engineering teams.

This code-like treatment allows for thorough review, editing, and version control. Additionally, Sensu Go is designed for scalability and seamlessly integrates with other DevOps monitoring tools such as Splunk, PagerDuty, ServiceNow, and Elasticsearch.
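As a rough illustration of the monitoring-as-code idea, the Python sketch below builds a check definition as data and serializes it to JSON, so it can be reviewed, diffed, and versioned like application code. It assumes the general shape of a Sensu Go `core/v2` `CheckConfig` resource; the check name, command, and subscription are hypothetical, and the fields should be confirmed against the Sensu documentation before the output is applied with `sensuctl create`.

```python
import json


def make_check(name: str, command: str, interval: int, subscriptions: list[str]) -> dict:
    """Build a Sensu Go-style CheckConfig resource as a plain dict (assumed core/v2 shape)."""
    if interval <= 0:
        raise ValueError("interval must be a positive number of seconds")
    return {
        "type": "CheckConfig",
        "api_version": "core/v2",
        "metadata": {"name": name, "namespace": "default"},
        "spec": {
            "command": command,
            "interval": interval,
            "subscriptions": subscriptions,
            "publish": True,
        },
    }


# Hypothetical check: run a CPU check every 60 seconds on agents subscribed to "system".
check = make_check("check-cpu", "check-cpu-usage -w 75 -c 90", 60, ["system"])

# Serialize deterministically so diffs in version control stay small and readable.
print(json.dumps(check, indent=2, sort_keys=True))
```

Keeping `sort_keys=True` makes the serialized output stable, so a pull request shows only the fields that actually changed.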

10. Splunk

Splunk offers continuous monitoring capabilities that enable organizations to oversee the complete application lifecycle. It delivers real-time monitoring of infrastructure, along with analytics and troubleshooting functionalities for on-premises, multi-cloud, and hybrid settings. The platform also features real-time alerts, comprehensive visibility across the stack, Kubernetes monitoring, visualization tools, scaling options, and automation of monitoring processes, all consolidated in a single interface.

Additionally, Splunk’s active online community of more than 13,000 active users, together with its more than 200 integrations, is an excellent resource for support and customization.

11. ChaosSearch

For those who prefer utilizing Amazon S3 or Google Cloud Storage buckets for backend storage, ChaosSearch simplifies the process of collecting, aggregating, summarizing, and analyzing metrics and logs. The platform allows you to establish triggers and alerts, ensuring that engineers receive prompt notifications regarding anomalies while monitoring various infrastructure components such as servers, load balancers, and services.

In addition, it provides monitoring capabilities for Kubernetes and Docker containers. Beyond its support for storage-based isolation on Amazon S3, ChaosSearch also offers SSO and RBAC for enhanced data protection.

12. Sematext

Sematext offers a comprehensive monitoring solution tailored for DevOps teams, allowing them to oversee back-end and front-end logs, performance metrics, APIs, and the overall health of their computing environments.

Additionally, it facilitates the monitoring of real users, devices, networks, containers, microservices, and databases. Users can also configure log management, synthetic monitoring, and set up triggers and alerts. With Sematext’s dashboards, users can effectively visualize data and extract actionable insights.

13. Elastic Stack (ELK)

Engineers can efficiently store, search, and analyze data from various sources using the Elastic Stack. The applications of ELK encompass logs, SIEM, endpoints, metrics, uptime, and APM, along with security monitoring. ELK stands for Elasticsearch, Logstash, and Kibana, which are its three fundamental components.

Logstash ingests data from any source and in any format, processes it on the server side, and sends it to Elasticsearch, which stores and indexes the data for search and analysis.

Kibana then visualizes the processed and stored data and makes it easy to share. Notable alternatives to the Elastic Stack include LogicMonitor, New Relic, Dynatrace, Datadog, Sumo Logic, and BMC Helix Operations Management.
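To show what querying the stack looks like, here is a minimal Python sketch that builds an Elasticsearch query DSL body for recent error logs and prepares a request to the `_search` endpoint. It assumes an index pattern named `logs-*`, a node at `http://localhost:9200`, and Elastic Common Schema field names (`service.name`, `log.level`, `@timestamp`); adjust all of these to your own mappings.

```python
import json
import urllib.request


def build_error_query(service: str, minutes: int = 15, size: int = 50) -> dict:
    """Query DSL body: error-level logs for one service within the last `minutes` minutes."""
    return {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {
            "bool": {
                "filter": [
                    {"term": {"service.name": service}},
                    {"term": {"log.level": "error"}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
    }


def search_request(base_url: str, index: str, body: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the index's _search endpoint."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = search_request("http://localhost:9200", "logs-*", build_error_query("checkout"))
# urllib.request.urlopen(req) would send it to a running Elasticsearch node.
```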

Data aggregation and cross-domain enrichment tools

This category encompasses a new wave of AIOps tools that utilize artificial intelligence and machine learning methodologies to enhance telemetry data. AIOps tools facilitate the detection of problems within your enterprise system by autonomously gathering, analyzing, and reporting extensive data from various sources.

14. BigPanda

BigPanda’s event correlation algorithms streamline the aggregation, enrichment, and correlation of alerts across diverse infrastructures, cloud environments, and applications. This process minimizes alert noise by consolidating multiple alerts into a single, comprehensive incident. Additionally, it facilitates the distribution of alerts through established channels, including ticketing systems, collaboration tools, and reporting mechanisms.
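The core idea of alert correlation can be sketched in a few lines of Python. This is not BigPanda’s algorithm; it is a simple time-window grouping under the assumption that alerts from the same host arriving within five minutes of each other belong to one incident. The `Alert` and `Incident` types are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    host: str
    check: str
    timestamp: float  # seconds since epoch


@dataclass
class Incident:
    host: str
    alerts: list = field(default_factory=list)

    @property
    def last_seen(self) -> float:
        return self.alerts[-1].timestamp


def correlate(alerts: list[Alert], window: float = 300.0) -> list[Incident]:
    """Group alerts for the same host that arrive within `window` seconds of the previous one."""
    open_incidents: dict[str, Incident] = {}
    incidents: list[Incident] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = open_incidents.get(alert.host)
        # Start a new incident if the host has none yet or its last alert is too old.
        if current is None or alert.timestamp - current.last_seen > window:
            current = Incident(host=alert.host)
            open_incidents[alert.host] = current
            incidents.append(current)
        current.alerts.append(alert)
    return incidents
```

Only the host is used as the correlation key here; real tools also correlate on topology, tags, and alert text.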

15. Planview Hub (Formerly Tasktop Integration Hub)

The Planview Hub empowers DevOps teams to unify multiple essential software delivery tools, eliminating the need to manage them individually. This is particularly beneficial for those who prefer to consolidate everything, including Git and code quality assurance processes, within a single platform.

The Planview Hub features numerous pre-built connectors that facilitate quick and straightforward no-code integrations. This functionality supports a diverse array of integrations, automating workflows from popular Git repositories to platforms such as Jira, Azure DevOps, ServiceNow, and Jama.

In contrast to traditional point-to-point integrations, the Hub employs model-based integration, which allows for quicker setup, reduced mapping requirements, and less time dedicated to maintaining integrations.
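The reduced mapping requirement follows from the hub shape: connecting n tools pairwise needs up to n(n-1)/2 mappings, while mapping each tool to one shared model needs only n. The Python sketch below illustrates that general pattern, not Planview Hub’s actual implementation; the field names and the two adapters are illustrative, and real integrations would also translate values such as status names.

```python
# One adapter per tool: tool-specific field name -> shared-model field name (illustrative).
ADAPTERS = {
    "jira": {"summary": "title", "status": "status"},
    "servicenow": {"short_description": "title", "state": "status"},
}


def to_canonical(tool: str, record: dict) -> dict:
    """Convert a tool-specific record into the shared model."""
    mapping = ADAPTERS[tool]
    return {canon: record[native] for native, canon in mapping.items()}


def from_canonical(tool: str, item: dict) -> dict:
    """Convert a shared-model item into a tool-specific record."""
    mapping = ADAPTERS[tool]
    return {native: item[canon] for native, canon in mapping.items()}


def translate(src: str, dst: str, record: dict) -> dict:
    """Any tool to any tool goes through the shared model, so no pairwise mapping is needed."""
    return from_canonical(dst, to_canonical(src, record))


def mapping_counts(n_tools: int) -> tuple[int, int]:
    """(point-to-point pairs, hub adapters) needed to connect n tools."""
    return n_tools * (n_tools - 1) // 2, n_tools
```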

16. Librato (Now part of SolarWinds AppOptics)

Since its launch, Librato has been engineered for scalable, redundant monitoring of time-series data. The first step is to send data to Librato through one of its many ready-to-use integrations; alternatively, you can POST directly to its RESTful API.
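For illustration, the sketch below prepares such a POST in Python using only the standard library. The endpoint URL, the basic-auth scheme (account email plus API token), and the `gauges` payload shape are assumptions based on the legacy Librato metrics API; since Librato now lives inside SolarWinds AppOptics, check the current API reference before relying on them. The metric and source names are hypothetical.

```python
import base64
import json
import urllib.request

# Assumed legacy Librato metrics endpoint; verify against current documentation.
LIBRATO_URL = "https://metrics-api.librato.com/v1/metrics"


def build_metrics_request(email: str, token: str, gauges: dict[str, float], source: str) -> urllib.request.Request:
    """Prepare (without sending) a POST of gauge measurements using HTTP basic auth."""
    payload = {"gauges": [{"name": n, "value": v, "source": source} for n, v in gauges.items()]}
    credentials = base64.b64encode(f"{email}:{token}".encode()).decode()
    return urllib.request.Request(
        LIBRATO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {credentials}"},
        method="POST",
    )
```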

By developing dashboards, you can visualize your metrics through charts and filter your data to pinpoint specific issues. Additionally, you can configure alerts for critical metrics to ensure you remain informed about the health of your services. Notifications can be sent via email, chat, or your preferred escalation service.

Librato supports Rails 3.x and Rack applications, JVM-based applications, and various other programming languages. Beyond creating custom charts and workspaces, users can also add one-time events and set threshold-based alerts.

Collaboration is enhanced through multi-user access, PNG chart snapshots, private Spaces links, and integrations with platforms such as Slack, HipChat, and PagerDuty.

Business service health monitoring tools

Historically, DevOps teams have prioritized engineering concerns over the effects of their work on financial outcomes. Increasingly, however, DevOps teams are focusing on monitoring websites, mobile applications, end users, and real user monitoring (RUM) data, driven by Engineering-Led Optimization. Below are several tools that can support these optimization efforts.

17. Akamai mPulse

mPulse provides real-time insights derived from actual user data, allowing you to correlate user behavior with your business performance. It gathers comprehensive information across 200 business and performance metrics directly from users’ browsers. This data is analyzed to pinpoint the underlying causes of latency issues and revenue declines across all page views.

You can expect to gather performance metrics, including bandwidth usage and page load times, as well as business indicators such as total orders, bounce rates, and conversion rates.
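Ratios like these are straightforward to compute once beacon data is grouped into sessions. The Python sketch below uses common definitions (a bounce is a single-page session, and a conversion is a session with an order); mPulse may define them differently, and the `Session` type is a hypothetical stand-in for real beacon data.

```python
from dataclasses import dataclass


@dataclass
class Session:
    page_views: int
    load_times_ms: list[float]
    ordered: bool


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Common business and performance ratios over a set of RUM sessions."""
    total = len(sessions)
    if total == 0:
        return {"bounce_rate": 0.0, "conversion_rate": 0.0, "avg_load_ms": 0.0}
    bounces = sum(1 for s in sessions if s.page_views == 1)
    orders = sum(1 for s in sessions if s.ordered)
    loads = [t for s in sessions for t in s.load_times_ms]
    return {
        "bounce_rate": bounces / total,
        "conversion_rate": orders / total,
        "avg_load_ms": sum(loads) / len(loads) if loads else 0.0,
    }
```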

Real-time reports on user activity are available immediately upon the arrival of the first mPulse beacon. The mPulse dashboard features widgets that display detailed breakdowns by various segments, including bandwidth distribution, page categories, geographic locations, and browser types.

If desired, your organization has the option to create customized dashboards to arrange the data in a manner that best suits its needs.

18. Sumo Logic

Sumo Logic is primarily recognized for its Software as a Service (SaaS) offerings in log analytics, business operations, and security monitoring, all enhanced by artificial intelligence and machine learning technologies. The platform provides out-of-the-box dashboards and AI-driven troubleshooting capabilities, enabling users to visualize, analyze, and respond to activities across their business processes comprehensively.

Furthermore, Sumo Logic includes Cloud Security Information and Event Management (SIEM) and Security Orchestration, Automation, and Response (SOAR) solutions to safeguard your data and workloads. Given that IBM estimates the average cost of a data breach at roughly $4.5 million, Sumo Logic’s focus on cloud security is advantageous for organizations of all sizes.

In essence, Sumo Logic serves as a comprehensive observability platform that includes features for capacity planning and forecasting. It also demonstrates superior scalability compared to some competing solutions. By aggregating data from your infrastructure, applications, business operations, and end-users into a unified platform, you gain a holistic view of your IT environment. However, it is important to note that Sumo Logic is not intended for on-premises deployments, unlike alternatives such as Datadog and Sematext.

19. BMC Helix Operations Management

The Helix Operations Management tool was developed to enhance visibility in hybrid cloud environments. It integrates automation, AIOps, interactive dashboards, log analytics, event management, and intuitive workflows into a single platform.

BMC Helix Operations Management excels at providing real-time insight into the operations of your containerized applications. By monitoring these activities, you can see how technical problems affect customer satisfaction and, consequently, your financial performance.

Furthermore, the tool offers capabilities such as root cause analysis and predictive analytics, enabling you to address these issues effectively and safeguard your business interests.

DevOps source code control tools

DevOps is defined by the collaboration of various teams who work on code concurrently, enabling swift and regular updates to applications. This ongoing enhancement leads to numerous modifications in the codebase. It is essential for teams to guarantee that all engineers are utilizing the same version of the source code. Source code management tools facilitate this process.

20. Git (GitHub, GitLab, and Bitbucket)

Numerous DevOps teams utilize Git as their preferred source code management system. Its local branching architecture, diverse workflows, and staging capabilities contribute to its popularity compared to alternatives like Mercurial, CVS, Helix Core, and Subversion.

While Git is installed locally, GitHub facilitates remote collaboration and distributed source code management in the cloud. Additionally, both Bitbucket and GitLab are well-suited for enterprise applications.

Monitoring CI/CD pipelines and configurations

Jenkins, Red Hat Ansible, Bamboo, Chef, Puppet, and CircleCI are some of the leading CI/CD tools available today. By monitoring the CI/CD pipelines associated with these tools, you can enhance visibility across all environments, including development, testing, and production.

21. AppDynamics

Numerous tools and techniques exist for achieving code-level visibility. For instance, integrating Jenkins with Prometheus for data ingestion and storage, alongside Grafana for visualization, is a viable option. Alternatively, you might consider a comprehensive continuous monitoring solution for your CI/CD pipeline, such as AppDynamics or Splunk.
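Prometheus collects metrics by scraping a plain-text exposition format over HTTP, and the Jenkins Prometheus plugin exposes its own metrics that way. If you want to publish extra pipeline metrics yourself, for example from a custom exporter, a minimal Python sketch of rendering one gauge in that format looks like this; the metric and label names are hypothetical.

```python
def _escape(value: str) -> str:
    # Label values must escape backslashes, double quotes, and newlines.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name: str, help_text: str, samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one gauge metric in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_str = ",".join(f'{k}="{_escape(v)}"' for k, v in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


print(render_gauge(
    "ci_build_duration_seconds",
    "Duration of the last build.",
    [({"job": "api", "branch": "main"}, 312.5)],
))
```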

AppDynamics delivers real-time telemetry for both customer and business metrics, allowing you to oversee infrastructure, services, networks, and applications across multiple cloud environments. Additionally, it offers insights into Kubernetes, Docker, and Evolven, along with root-cause diagnostics, a pay-per-use pricing structure, and hybrid monitoring capabilities.

Test server monitoring

Test monitoring evaluates a test while it runs and provides constructive feedback on its progress. Monitoring and controlling test progress involves various techniques that ensure testing meets defined benchmarks throughout each phase. Selenium is a prime example of a tool used in this area.

22. Selenium

Selenium is a widely used open-source framework for automating web browsers, primarily to test web applications. However, its capabilities extend beyond basic testing. With Selenium WebDriver, you can automate regression tests and suites, enabling scalable, distributed browser-based regression testing across various environments.

Selenium Grid acts as a centralized hub, allowing you to execute tests on multiple machines, operating systems, browsers, and environments simultaneously. Additionally, Selenium IDE is an add-on available for Firefox, Chrome, and Edge that facilitates straightforward recording and playback of browser interactions.
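As a small example of the kind of check WebDriver makes possible, the Python function below loads a page, times the load, and verifies the title. It relies only on the WebDriver `get()` method and `title` property, and takes the driver as a parameter so it can run against a local browser or a Grid node; the URL, expected title, and five-second budget are assumptions.

```python
import time


def check_page(driver, url: str, expected_title: str, max_seconds: float = 5.0) -> dict:
    """Load `url` with a WebDriver-compatible object and report title match and load time."""
    start = time.perf_counter()
    driver.get(url)  # with the default page-load strategy, returns after the page has loaded
    elapsed = time.perf_counter() - start
    return {
        "url": url,
        "title_ok": expected_title in driver.title,
        "elapsed_s": elapsed,
        "fast_enough": elapsed <= max_seconds,
    }
```

With Selenium installed, you could pass `webdriver.Chrome()` for a local run, or, assuming a Grid at `http://localhost:4444`, `webdriver.Remote(command_executor="http://localhost:4444", options=webdriver.ChromeOptions())`, and call `driver.quit()` when done.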

Alternatives to Selenium include tools such as Ranorex and Test.ai.

Alarm aggregation and incident management

Numerous enterprise-level tools exist that can consolidate and analyze data from various sources. While BigPanda is capable of aggregating data from multiple origins, PagerDuty serves as an effective option for DevOps teams requiring on-call management, incident response, event management, and operational analytics.

23. PagerDuty

PagerDuty serves as a dispatching service that consolidates alerts and minimizes unnecessary noise. With its user-friendly interface and well-structured data presentation, it makes correlations among events easier to identify.

The platform seamlessly integrates with monitoring systems, customer support, API management, and performance management tools. Supporting over 550 integrations, it allows for the connection of nearly any monitoring or log management tool capable of initiating REST calls or sending emails. Notable integrations include AppDynamics, Microsoft Teams, AWS, ServiceNow, and Slack, which you may already be utilizing.

Alternatives to PagerDuty include Slack, AlertOps, Splunk On-Call, and ServiceNow IT Service Management.

DevOps Monitoring Best Practices

In any DevOps strategy, it is crucial to take into account the best practices associated with DevOps monitoring to ensure the process is developed and implemented effectively from the beginning. Let us explore the best practices that contribute to a successful DevOps monitoring strategy.

Set clear objectives

Establishing clear goals is essential for identifying the appropriate metrics to track and the tools to implement. These objectives can include enhancing system efficiency, reducing downtime, and maximizing resource utilization.

Automation

The core principle of DevOps revolves around automation. Strive to automate as many monitoring activities as possible. By doing so, you reduce the chances of human error, promote consistency, and enable your team to concentrate on more strategic initiatives.

Implement continuous monitoring

Continuous monitoring entails the real-time observation of your systems and applications. This strategy enables the swift detection and resolution of problems before they affect users. Additionally, it supports continuous enhancement and agile development methodologies.

Foster a collaborative environment

Promote collaboration between the development, operations, and security teams. By sharing monitoring data with colleagues, issues can be resolved swiftly, fostering a culture of responsibility.

Employ centralized logging and monitoring

Consolidate your logging and monitoring information to create a single source of truth. Centralized logging streamlines the integration of data from multiple sources, enabling comprehensive analysis and the generation of unified reports. Tools commonly utilized in DevOps for this function include Elasticsearch, Logstash, and Kibana, collectively known as the ELK Stack.

Integrate monitoring with CI/CD pipelines

Incorporate your monitoring tools within your CI/CD pipelines to facilitate ongoing feedback. This integration aids in the prompt identification of issues during development, ensuring that only high-quality code is deployed to production. Automated testing and monitoring assessments can be integrated into the CI/CD process.
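One way to make monitoring an automated gate is a small check that a pipeline step runs after deploying to a staging environment. The Python sketch below assumes the error rate and 95th-percentile latency have already been fetched from your monitoring tool; the thresholds are illustrative, not recommendations.

```python
def gate(error_rate: float, p95_latency_ms: float,
         max_error_rate: float = 0.01, max_p95_ms: float = 500.0) -> list[str]:
    """Return the list of failed checks; an empty list means the build may be promoted."""
    failures = []
    if error_rate > max_error_rate:
        failures.append(f"error rate {error_rate:.2%} exceeds {max_error_rate:.2%}")
    if p95_latency_ms > max_p95_ms:
        failures.append(f"p95 latency {p95_latency_ms:.0f} ms exceeds {max_p95_ms:.0f} ms")
    return failures
```

A pipeline step would exit with a non-zero status when the returned list is non-empty, which makes most CI tools stop the promotion.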

Prioritize security monitoring

Incorporate security monitoring into your DevOps workflows to identify and mitigate vulnerabilities. Watch for unauthorized access and unusual activity, and verify compliance with security standards.

Set up meaningful alerts

Establish notifications to keep your team informed about urgent matters. Ensure that these notifications offer explicit guidance on the required actions. Implement threshold alerts to oversee specific conditions and predictive alerts to anticipate possible challenges.
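The difference between the two alert types can be sketched in Python. The threshold alert fires only after several consecutive breaching samples, which suppresses one-off spikes; the predictive alert fits a least-squares line and fires if the projection crosses the limit within a chosen horizon. Both are simplified illustrations with hypothetical parameters, not any specific tool’s alerting engine.

```python
def threshold_alert(values: list[float], limit: float, for_points: int) -> bool:
    """Fire only if the last `for_points` samples all exceed `limit`."""
    if for_points <= 0:
        raise ValueError("for_points must be positive")
    recent = values[-for_points:]
    return len(recent) == for_points and all(v > limit for v in recent)


def predict_breach(values: list[float], limit: float, horizon: int) -> bool:
    """Fit a least-squares line and check whether it reaches `limit` within `horizon` more samples."""
    n = len(values)
    if n < 2:
        return False
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(values))
    var = sum((i - mean_x) ** 2 for i in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_x
    projected = intercept + slope * (n - 1 + horizon)
    return projected >= limit
```

For example, disk usage samples of 70, 72, 74, 76, and 78 percent project to 88 percent five samples ahead, so `predict_breach` would warn about an 85 percent limit before any threshold alert fired.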

Use dashboards and visualizations

Utilize dashboards and visual tools to present monitoring data in a clear and accessible manner. Dashboards provide immediate insights into system health, performance trends, and irregularities. By customizing these dashboards, teams can focus on the metrics that are most relevant to their roles.

Focus on user experience

Prioritize user experience in your monitoring activities. Track user-focused metrics such as response times, error rates, and service uptime. Implement Real User Monitoring (RUM) and synthetic monitoring tools to assess user engagement and satisfaction levels.

Keep track of both infrastructure and applications

Comprehensive monitoring should include both your infrastructure and applications. Infrastructure monitoring focuses on overseeing servers, networks, and storage systems. Application monitoring emphasizes performance, availability, and user experience. This holistic approach guarantees that all elements of your infrastructure are taken into account.

Conduct regular reviews and audits

Regularly review and evaluate your monitoring processes and tools. Assess the effectiveness of your monitoring strategy, make adjustments to the monitoring configurations as needed, and confirm adherence to industry standards and best practices. Ongoing evaluation and enhancement are essential for maintaining an effective monitoring system.

DevOps Monitoring Use Cases

It is essential for every phase of your DevOps production to be transparent. This encompasses a comprehensive overview of the status and operations within your infrastructure platform. Additionally, even the most granular elements of value, such as an individual line of code, require your focus. Let us discuss the key functions involved.

Code linting

Code linting tools evaluate your code for stylistic choices, syntax correctness, and possible errors. Often, they also assess adherence to best practices and coding standards. By utilizing linting, you can identify and resolve issues in your code prior to them leading to runtime errors or other complications. Additionally, linting promotes a clean and uniform codebase.
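To show what a single lint rule involves, here is a minimal Python sketch built on the standard `ast` module that flags bare `except:` clauses. Established linters already ship this check (flake8 reports it as E722), so treat this as an illustration of the mechanism rather than a replacement.

```python
import ast


def find_bare_excepts(source: str, filename: str = "<string>") -> list[str]:
    """Report `except:` clauses that name no exception type."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append(
                f"{filename}:{node.lineno}: bare 'except:' catches everything, including KeyboardInterrupt"
            )
    return findings
```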

Git workflow operations

Conflicts in the codebase may arise when multiple developers work on the same section of a project simultaneously. Git offers various tools to help manage and resolve these conflicts, such as branching, merging, and reverting problematic commits. By monitoring Git workflow activity for potential conflicts, you can maintain the integrity and consistency of your project.
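A simple complement to workflow monitoring is a CI check that rejects files still containing merge-conflict markers. Git’s own `git diff --check` already warns about them; the Python sketch below shows the same idea as a standalone scan, assuming the default conflict style (`<<<<<<<`, `=======`, `>>>>>>>`).

```python
import re

# Git writes these seven-character markers into files when a merge cannot be resolved automatically.
_MARKER = re.compile(r"^(<{7}|={7}|>{7})(?: |$)", re.MULTILINE)


def conflict_marker_lines(text: str) -> list[int]:
    """Return 1-based line numbers that look like leftover merge-conflict markers."""
    return [text.count("\n", 0, m.start()) + 1 for m in _MARKER.finditer(text)]
```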

Continuous Integration (CI) logs

Continuous Integration (CI) logs are essential for assessing the success of your code builds and identifying any errors or warnings that may arise. In the event of errors, it will be necessary to allocate resources for investigation, troubleshooting, and resolution. Furthermore, keeping an eye on your logs can assist in pinpointing potential problems within your build pipeline or codebase that require attention.
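A first pass over CI logs can be as simple as counting severity keywords and surfacing the first error. The Python sketch below assumes each relevant line contains the word `ERROR` or `WARNING` (or `WARN`); real CI systems format logs differently, so the pattern is a placeholder to adapt.

```python
import re
from collections import Counter

# Assumed log convention: a severity word such as ERROR or WARNING appears on the line.
_LEVEL = re.compile(r"\b(ERROR|WARN(?:ING)?)\b")


def summarize_ci_log(log_text: str) -> dict:
    """Count errors and warnings and remember the first error line."""
    counts = Counter()
    first_error = None
    for number, line in enumerate(log_text.splitlines(), start=1):
        match = _LEVEL.search(line)
        if not match:
            continue
        level = "error" if match.group(1) == "ERROR" else "warning"
        counts[level] += 1
        if level == "error" and first_error is None:
            first_error = (number, line.strip())
    return {"errors": counts["error"], "warnings": counts["warning"], "first_error": first_error}
```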

Continuous Deployment (CD) pipeline logs

Keeping an eye on your CD logs can offer important information regarding the health and performance of the pipeline. By reviewing these logs, you can diagnose issues with failed deployments and uncover any possible challenges.
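As an example of the health information a CD log can yield, the Python sketch below computes the deployment failure rate and average duration from records that are assumed to have already been parsed out of the logs. The `Deployment` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Deployment:
    service: str
    started: datetime
    finished: datetime
    succeeded: bool


def pipeline_health(deploys: list[Deployment]) -> dict:
    """Failure rate and average duration (minutes) computed from parsed CD log records."""
    if not deploys:
        return {"count": 0, "failure_rate": 0.0, "avg_minutes": 0.0}
    failed = sum(1 for d in deploys if not d.succeeded)
    minutes = [(d.finished - d.started).total_seconds() / 60 for d in deploys]
    return {
        "count": len(deploys),
        "failure_rate": failed / len(deploys),
        "avg_minutes": sum(minutes) / len(minutes),
    }
```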

Configuration management changelogs

Configuration management changelogs offer essential insights into the status of your systems and significant modifications. By keeping an eye on these logs, you can monitor both manual and automated alterations, detect any unauthorized changes, and address potential issues effectively.
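Detecting unauthorized changes usually comes down to comparing the declared configuration with what is actually running. The Python sketch below does that for nested dictionaries; the `desired` and `actual` structures are hypothetical stand-ins for what a configuration management tool records and what a host reports.

```python
def config_drift(desired: dict, actual: dict, prefix: str = "") -> list[str]:
    """Compare nested desired vs. observed configuration and describe every difference."""
    drift = []
    for key in sorted(set(desired) | set(actual)):
        path = f"{prefix}{key}"
        if key not in actual:
            drift.append(f"missing: {path}")
        elif key not in desired:
            drift.append(f"unexpected: {path}={actual[key]!r}")
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            # Recurse into nested sections, extending the dotted path.
            drift.extend(config_drift(desired[key], actual[key], prefix=f"{path}."))
        elif desired[key] != actual[key]:
            drift.append(f"changed: {path} {desired[key]!r} -> {actual[key]!r}")
    return drift
```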

Infrastructure deployment logs

Deployment logs record the timing of new stack deployments and indicate if any have encountered failures. These logs are valuable for diagnosing problems related to stack deployments and for detecting any unauthorized modifications in the infrastructure that could have led to a failure.

Code instrumentation

Code instrumentation involves integrating additional code into your application to gather data regarding its performance and operational flow. By implementing instrumentation, you can track stack calls and observe contextual values. Analyzing the output from code instrumentation enables you to assess the efficiency of your DevOps practices and pinpoint areas requiring enhancement. Furthermore, it can assist in detecting bugs and facilitate the testing process.
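A minimal example of instrumentation in Python is a decorator that records how long each call takes. The in-memory `CALL_TIMINGS` store and the `parse_order` function are hypothetical; in practice the measurements would go to an APM agent or a metrics library.

```python
import functools
import time
from collections import defaultdict

# In-process store of call durations per function name (a stand-in for a real metrics backend).
CALL_TIMINGS: dict[str, list[float]] = defaultdict(list)


def instrumented(func):
    """Record the elapsed time of every call, including calls that raise."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            CALL_TIMINGS[func.__qualname__].append(time.perf_counter() - start)
    return wrapper


@instrumented
def parse_order(raw: str) -> dict:
    sku, qty = raw.split(":")
    return {"sku": sku, "qty": int(qty)}
```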

Distributed tracing

Distributed tracing plays a vital role in the monitoring and debugging of microservices applications. By gaining insights into the interactions between your applications, typically via APIs, it becomes simpler to pinpoint and resolve issues. Additionally, distributed tracing aids in enhancing application performance by revealing potential bottlenecks.

Application Performance Monitoring (APM)

APM is designed to monitor the performance and availability of applications. This encompasses various aspects such as tracking response times, detecting errors, and employing Real User Monitoring (RUM) to assess the end-user experience, among other functionalities. Utilizing APM solutions enables you to pinpoint and resolve issues proactively, preventing them from impacting the overall system.
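Response-time tracking in APM is usually reported as percentiles rather than averages, because a few slow requests can hide behind a good mean. The Python sketch below uses the nearest-rank percentile method and counts HTTP 5xx responses as errors; both choices are assumptions, and APM products may compute these figures differently.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def apm_summary(requests: list[tuple[float, int]]) -> dict:
    """requests: (latency_ms, http_status) pairs for one endpoint over one time window."""
    latencies = [lat for lat, _ in requests]
    errors = sum(1 for _, status in requests if status >= 500)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
    }
```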

API access monitoring

Monitoring and documenting API access and traffic allows you to detect and thwart unauthorized access as well as potential DDoS attacks.
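A basic building block for this kind of monitoring is a per-client sliding window. The Python sketch below flags any client that exceeds a request limit within the window; the limit, window, and client addresses are hypothetical, and timestamps are assumed to arrive in order. A single noisy client suggests abuse or a misbehaving integration, while a distributed attack would also need an aggregate view across clients.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flag clients whose request count in the last `window` seconds exceeds `limit`."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._hits: dict[str, deque] = defaultdict(deque)

    def record(self, client: str, timestamp: float) -> bool:
        """Record one request; return True if the client is now over the limit."""
        hits = self._hits[client]
        hits.append(timestamp)
        # Drop requests that have fallen out of the window.
        while hits and hits[0] <= timestamp - self.window:
            hits.popleft()
        return len(hits) > self.limit
```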

Infrastructure monitoring

Infrastructure monitoring involves overseeing the performance and availability of computer systems and networks. Tools designed for infrastructure monitoring can deliver real-time data on metrics such as CPU usage, disk capacity, memory, and network traffic. These tools are instrumental in detecting resource-related issues before they lead to outages or other complications.
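Agents collect these metrics continuously, but the underlying readings are simple. The Python sketch below uses only the standard library to read disk usage and the one-minute load average (Unix only) and compare them with illustrative limits; memory and network counters typically need a third-party library such as psutil.

```python
import os
import shutil
from typing import Optional


def disk_usage_percent(path: str = "/") -> float:
    """Percentage of the filesystem at `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def load_per_cpu() -> Optional[float]:
    """1-minute load average divided by CPU count (Unix only; None elsewhere)."""
    if not hasattr(os, "getloadavg"):
        return None
    one_minute, _, _ = os.getloadavg()
    return one_minute / (os.cpu_count() or 1)


def check_host(disk_limit: float = 90.0, load_limit: float = 1.0) -> list[str]:
    """Return human-readable warnings for readings above the (illustrative) limits."""
    warnings = []
    disk = disk_usage_percent()
    if disk > disk_limit:
        warnings.append(f"disk {disk:.1f}% used (limit {disk_limit}%)")
    load = load_per_cpu()
    if load is not None and load > load_limit:
        warnings.append(f"load {load:.2f} per CPU (limit {load_limit})")
    return warnings
```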

Network monitoring

Network monitoring involves overseeing the performance and availability of a computer network and its components. Network administrators use monitoring tools to detect network-related issues and take corrective action. Network monitoring also uses flow logs to pinpoint potentially suspicious activity.
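Flow logs are a common data source for this. The Python sketch below parses records in the default (version 2) AWS VPC Flow Logs format and lists source addresses with repeated rejected connections; the field order reflects that default format, while the threshold is an arbitrary assumption, and many rejects are a prompt for investigation rather than proof of an attack.

```python
from collections import Counter

# Field order of the default (version 2) AWS VPC Flow Logs record format.
FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr", "srcport",
          "dstport", "protocol", "packets", "bytes", "start", "end", "action", "log_status"]


def parse_record(line: str) -> dict:
    """Split one space-separated flow log record into named fields."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


def rejected_sources(lines: list[str], min_rejects: int = 3) -> dict[str, int]:
    """Source addresses with at least `min_rejects` REJECTed flows."""
    counts = Counter()
    for line in lines:
        record = parse_record(line)
        if record["action"] == "REJECT":
            counts[record["srcaddr"]] += 1
    return {src: n for src, n in counts.items() if n >= min_rejects}
```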

Synthetic monitoring

Synthetic monitoring is a testing approach that runs scripted, simulated transactions against your systems, typically on a schedule and from multiple locations, instead of waiting for real user traffic. This method allows you to assess performance, functionality, and reliability, whether for specific components or for the entire system as a whole.
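A single synthetic probe can be sketched in Python with the standard library: request a URL, time it, and grade the result. Scheduling and running the probe from several locations is left to the monitoring platform; the URL, timeout, and one-second budget are assumptions. The `opener` parameter defaults to `urllib.request.urlopen` and exists only so the check can be exercised without network access.

```python
import time
import urllib.error
import urllib.request


def synthetic_check(url: str, timeout: float = 5.0, max_ms: float = 1000.0,
                    opener=urllib.request.urlopen) -> dict:
    """Issue one scripted request, as a scheduled probe would, and grade the result."""
    start = time.perf_counter()
    try:
        with opener(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as exc:
        status = exc.code  # the server answered, but with an error status
    except OSError as exc:
        # URLError, connection failures, and timeouts are all OSError subclasses.
        return {"url": url, "ok": False, "status": None, "error": str(exc)}
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "url": url,
        "ok": 200 <= status < 400 and elapsed_ms <= max_ms,
        "status": status,
        "elapsed_ms": elapsed_ms,
    }
```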

What should you look for in a DevOps Monitoring Platform?

When considering a solution for DevOps monitoring, the ideal system is one that integrates easily into your workflow. This means the platform of your choice should integrate with the tools your teams use in their development workflow, including:

- Application development tools

- Version control

- CI/CD pipelines

- Cloud services and infrastructure

- Infrastructure-as-code systems

- Ticketing and issue tracking systems

- Team collaboration and communications tools

The ideal DevOps monitoring platform will offer native integrations with your tools, or trusted third-party integrations should be available. It should also help you meet the regulatory frameworks that apply to your organization.

It is essential for every team member to have access to real-time data from a monitoring platform, enabling them to proactively identify and eliminate bottlenecks. Your monitoring system should complement your existing automation efforts without hindering them, while also enhancing communication and ensuring security and safety measures are in place. Additionally, seek out reports or dashboards that are user-friendly and accessible at all levels.

These visual representations should contextualize data within a broader system and include dependency maps. Log streams, whether from the cloud or local sources, must seamlessly integrate with all layers of your technology stack and be easy to navigate. The platform should also offer insights into historical trends and anomalies, as well as the capability to correlate events effectively.

The future of DevOps monitoring

The practice of DevOps monitoring is advancing rapidly and is poised for significant growth. With projections indicating that the DevOps market will surpass $20 billion by 2026, the demand for ongoing oversight and enhancement of DevOps practices within organizations is unlikely to diminish. As monitoring tools for DevOps evolve, we can expect greater automation and integration of these solutions. The implementation of shift-left testing will enhance both security and product quality, contributing to the transformation towards DevSecOps.

It is anticipated that more DevOps teams will embrace comprehensive, integrated software development life cycle pipelines, bolstered by appropriate continuous monitoring tools. A Forrester report suggests that specialized DevOps monitoring solutions will facilitate MLOps, unified CI/CD, and CD/RA (continuous delivery and release automation) pipelines, engaging low-code or no-code developers and platforms. Furthermore, there is an expectation that monitoring tools in the DevOps space will expand to encompass network edge devices.

Given the rapid changes already underway, it is reasonable to conclude that DevOps monitoring will continue to evolve, enhancing collaboration among development teams, IT departments, and the broader business landscape for the foreseeable future.